Monday, May 30, 2011

Friday, May 27, 2011

The fRiDaY File - Zombie Apocalyse!

...a Memo from the CDC

There are all kinds of emergencies out there that we can prepare for. Take a zombie apocalypse for example. That’s right, I said z-o-m-b-i-e a-p-o-c-a-l-y-p-s-e. You may laugh now, but when it happens you’ll be happy you read this, and hey, maybe you’ll even learn a thing or two about how to prepare for a real emergency... [more]

A Graphical Plan Reading List

Question: I've used Database Tracing In 50 Easy Steps to get a graphical plan with statistics, now what do I do?

How do I use the information in the graphical plan to make my SQL go faster?

Answer: Ha! ...when you find that out let me know, will you?

Seriously, unless you're a member of the iAnywhere Solutions Engineering team who wrote the query optimizer, the question becomes "what looks wrong?

For example, thick red lines are one thing that looks wrong, boxes outlined in red are another, and estimates that are vastly different from actual results are yet another.

Then, the question becomes, "what can I do about the things that look wrong?"

Answers to both questions appear sprinkled throughout the graphical plan documentation in the Help; here's a Reading List for SQL Anwyhere 11.0.1:

- Reading graphical plans

- Analyzing performance using the graphical plan with statistics

- Viewing detailed graphical plan node information

- Node Statistics field descriptions

- Optimizer Statistics field descriptions

- Viewing selectivity in the graphical plan

- Execution plan abbreviations

- Reading graphical plans

- Analyzing performance using the graphical plan with statistics

- Viewing detailed graphical plan node information

- Node Statistics field descriptions

- Optimizer Statistics field descriptions

- Viewing selectivity in the graphical plan

- Execution plan abbreviations

Wednesday, May 25, 2011

Blocking On Demand

Do you ever succumb to "Work Avoidance Syndrome"?That's when you tackle anything except the task you're supposed to be working on......when you check your inbox or Google News or Drudge a thousand times a day instead of working on, well, work.

Right now, the work being avoided 'round here is Foxhound's new AutoDrop feature for automatically dropping ill-behaved database connections that block other connections or have been blocked for a long time.

Instead of that, how about this?

How to easily create blocked connections

First, some setup:

- a few different user ids so it's easy to tell which connection is which when there are a lot of them,

- a table for the different connections to UPDATE and (if they're lucky) grab locks or (if they're not so lucky) get blocked, and

- some rows for the connections to UPDATE.

GRANT CONNECT TO "E.Fernandez" IDENTIFIED BY SQL;

GRANT DBA TO "E.Fernandez";

GRANT CONNECT TO "L.Rodriguez" IDENTIFIED BY SQL;

GRANT DBA TO "L.Rodriguez";

GRANT CONNECT TO "C.Gonzalez" IDENTIFIED BY SQL;

GRANT DBA TO "C.Gonzalez";

GRANT CONNECT TO "S.Johnson" IDENTIFIED BY SQL;

GRANT DBA TO "S.Johnson";

CREATE TABLE inventory (

item_id INTEGER NOT NULL DEFAULT AUTOINCREMENT,

item_count INTEGER NOT NULL,

item_name VARCHAR ( 1024 ) NOT NULL,

PRIMARY KEY ( item_id ) );

BEGIN

DECLARE @row_counter INTEGER;

SET @row_counter = 1;

WHILE @row_counter <= 10000 LOOP

INSERT inventory ( item_count, item_name ) VALUES ( 1, 'Initial load.' );

SET @row_counter = @row_counter + 1;

END LOOP;

COMMIT;

END;

Here's a Windows command file for E.Fernandez to grab and hold locks on three rows...

Tip: You can run SQL statements directly from a dbisql command line without having to open up a GUI window. You can even run more than one statement, even a BEGIN block with local declarations, and you can use the Windows command line separator character ^ to code the statements on separate lines.

Tip: You can use a WAITFOR DELAY statement to prevent a dbisql command line session from terminating right away when all its work is done. That's important in this case because when dbisql terminates it releases all the locks.

ECHO Lock some rows...

START "12.E.Fernandez"^

"%SQLANY12%\bin32\dbisql.com"^

-c "ENG=ddd12;DBN=ddd12;UID=E.Fernandez;PWD=SQL;CON=12.E.Fernandez;"^

UPDATE DBA.inventory SET item_count = item_count + 1 WHERE item_id BETWEEN 1 AND 3;^

WAITFOR DELAY '01:00:00';

PAUSE

Yes, the "E" in E.Fernandez stands for "Evil"... the WAITFOR DELAY statement simulates a long-running transaction: no COMMIT or ROLLBACK for an hour.

Tip: The Windows START prefix on the dbisql command is used to launch dbisql in a separate window. This allows the outer command file to get control and continue processing without waiting for the inner dbisql command to finish. You can read more about START by executing HELP START in a command window. The START prefix isn't really required on the E.Fernandez dbisql command line above, but it certainly is necessary in the next command file.Here's another Windows command file to show L.Rodriguez, C.Gonzalez and S.Johnson falling victim to (getting blocked by) the row locks acquired by E.Fernandez:

ECHO Get blocked...

START "12.L.Rodriguez"^

"%SQLANY12%\bin32\dbisql.com"^

-c "ENG=ddd12;DBN=ddd12;UID=L.Rodriguez;PWD=SQL;CON=12.L.Rodriguez"^

UPDATE DBA.inventory SET item_count = item_count + 1 WHERE item_id = 1;

START "12.C.Gonzalez"^

"%SQLANY12%\bin32\dbisql.com"^

-c "ENG=ddd12;DBN=ddd12;UID=C.Gonzalez;PWD=SQL;CON=12.C.Gonzalez"^

UPDATE DBA.inventory SET item_count = item_count + 1 WHERE item_id = 2;

START "12.S.Johnson"^

"%SQLANY12%\bin32\dbisql.com"^

-c "ENG=ddd12;DBN=ddd12;UID=S.Johnson;PWD=SQL;CON=12.S.Johnson"^

UPDATE DBA.inventory SET item_count = item_count + 1 WHERE item_id = 3;

PAUSE

Note: WAITFOR DELAY statements aren't required for these dbisql commands because they are already prevented from terminating when the UPDATE statements are blocked by the row locks held by E.Fernandez. As soon as the E.Fernandez dbisql command terminates, however, so will all three of the other dbisql commands....and that's all there is to it, no multi-threaded application program required, just a handful of dbisql commands. Here's what the four dbisql windows look like:

You can see what's going on by running the following script in a separate dbisql session:

SELECT Number,

Name,

Userid,

BlockedOn,

LockRowID,

LockTable

FROM sa_conn_info()

ORDER BY Number;

SELECT conn_id,

conn_name,

user_id,

row_identifier,

table_name,

lock_class,

lock_duration,

lock_type

FROM sa_locks()

ORDER BY conn_id;

The first SELECT shows all the current connections, with BlockedOn = 27 for each of the three blocked connections:

The second SELECT shows all the locks currently held:

The LockRowID values in the first result set can be matched up with the row_identifier values in the second result set, to determine the following:

- C.Gonzalez is blocked by a row lock on DBA.inventory where the ROWID() = 43450369,

- L.Rodriguez is blocked by a row lock on DBA.inventory where the ROWID() = 43450368, and

- S.Johnson is blocked by a row lock on DBA.inventory where the ROWID() = 43450370.

You can copy and paste the "Locked Row Query" values from Foxhound into a dbisql session to see the rows that are locked:

SELECT * FROM DBA.inventory WHERE ROWID ( inventory ) = 43450369;

SELECT * FROM DBA.inventory WHERE ROWID ( inventory ) = 43450368;

SELECT * FROM DBA.inventory WHERE ROWID ( inventory ) = 43450370;

item_id,item_count,item_name

2,4,'Initial load.'

item_id,item_count,item_name

1,5,'Initial load.'

item_id,item_count,item_name

3,4,'Initial load.'

Here's what the blocked connections look like in a SQL Anywhere 11 database: "Write lock" instead of the new "WriteNoPK lock" in version 12:

For the record, here's what they look like in Version 10.0.1:

Foxhound doesn't show the same level of detail for Versions 10.0.0 and earlier because the information isn't available to the outside world.

That wasn't really Work Avoidance, was it?

Nooooo, certainly not... the ability to create blocked connections is necessary when the time comes to test AutoDrop, right? So it's real work, right?

Monday, May 23, 2011

Latest SQL Anywhere EBF: 11.0.1.2606

11.0.1.2606 EBF - Windows 32/64 - May 20, 2011

Current builds...

HP-UX Itanium 11.0.1.2584 EBF 07 Apr 2011

10.0.1.3777 EBF 14 Oct 2008

HP-UX PA-RISC 10.0.1.3778 EBF 16 Oct 2008

IBM AIX 12.0.1 GA Upgrade from 12.0.0 15 Mar 2011

11.0.1.2584 EBF 07 Apr 2011

Linux Itanium 10.0.1.3777 EBF 14 Oct 2008

Linux x86/x64 12.0.1.3311 EBF 01 Apr 2011

11.0.1.2584 EBF 07 Apr 2011

10.0.1.4213 EBF 28 Apr 2011

Mac OS 12.0.1.3311 EBF 30 Mar 2011

11.0.1.2449 EBF 29 Jun 2010

10.0.1.4042 EBF 01 Oct 2010

NetWare 10.0.1.4036 EBF 10 Mar 2010

Solaris SPARC 12.0.1 GA Upgrade from 12.0.0 15 Mar 2011

11.0.1.2588 EBF 19 Apr 2011

10.0.1.4157 EBF 14 Dec 2010

Solaris x64 11.0.1.2584 EBF 07 Apr 2011

10.0.1.3870 EBF 01 Apr 2009

Windows CE 10.0.1.4036 EBF 09 Mar 2010

Windows Itanium 10.0.1 GA Upgrade from 10.0.0 26 Apr 2007

Windows x86/x64 12.0.1.3324 EBF 21 Apr 2011

11.0.1.2606 EBF *** 20 May 2011

10.0.1.4213 EBF 29 Apr 2011

Friday, May 20, 2011

Do not turn off your computer

Let's say your computer is starting up, and this is the second (or third, or fourth, or grrr!) time you've seen this display:

You're getting annoyed because it sits there for several minutes, never moving off 0%, before your computer finishes rebooting.Configuring Windows Updates

0% complete

Do not turn off your computer.

Stop! Pay attention! Watch the screen!

Something is going wrong, and it will flash

just before the message disappears and your computer finishes rebooting (I can't show you the whole message because it flashes by so fast).Failure...

Reverting changes

Yup, something's going wrong with your last set of Windows updates, and it looks like it's not going to fix itself...

Yup, you need to do something... here's how:

- Go to Control Panel - Windows Update - View Update History

- Use right mouse - Copy Details to record the Status - Failed entries; for example:

Update for Windows 7 for x64-based Systems (KB2534366) Failed

Update for Windows 7 for x64-based Systems (KB2529073) Failed - Use Windows Update to apply the failed updates one at a time; you might have to reboot after each one.

Wednesday, May 18, 2011

Database Tracing In 50 Easy Steps

This is a major revision of Database Tracing In English:

Update - August 6, 2012: To read about another developer's experience, see "Email from a developer" at the bottom of this article.

- with pictures this time,

- and more details

- including steps to create a separate tracing database

- and instructions for saving the captured graphical plans in *.saplan files for sending to other people

- or posting on the SQL Anywhere Forum.

Step 1. Start your application database with -x tcpip specified. Database Tracing needs TCP/IP even if you normally use Shared Memory, and if you forget to specify -x tcpip here you will eventually get the dreaded message "ATTACH TRACING could not connect to the tracing database".

Also, don't specify the -sb 0 option. If you do specify -sb 0, or forget -x tcpip, you'll be coming back here to Step 1 and starting over.

For the purposes of this demonstration, a brand-new empty SQL Anywhere 11 database will do just fine; here's a command file to set one up:

"%SQLANY11%\bin32\dbinit.exe"^ ddd11.db "%SQLANY11%\bin32\dbspawn.exe"^ -f^ "%SQLANY11%\bin32\dbeng11.exe"^ -o dbeng11_log_ddd11.txt^ -oe dbsrv11_log_fatal_ddd11.txt^ -x tcpip^ ddd11.db "%SQLANY11%\bin32\dbisql.com" ^ -c "ENG=ddd11;DBN=ddd11;UID=dba;PWD=sql;"

Step 2. Start Sybase Central 11.

Step 3. Connect to your application database.

Step 4. See the "Mode" item appear in the menu bar.

Design Flaw 1: The "Mode" item should always appear in the menu bar so folks won't go wandering around the GUI in a fruitless search for hidden functionality. It should also be enabled, even if you're not connected to a database, because you might want to view the results from a previous tracing session and for that you don't need no steenking connection to your application database. At this point in this demo, however, it doesn't matter because you are going to use the database you connected to in Step 3.

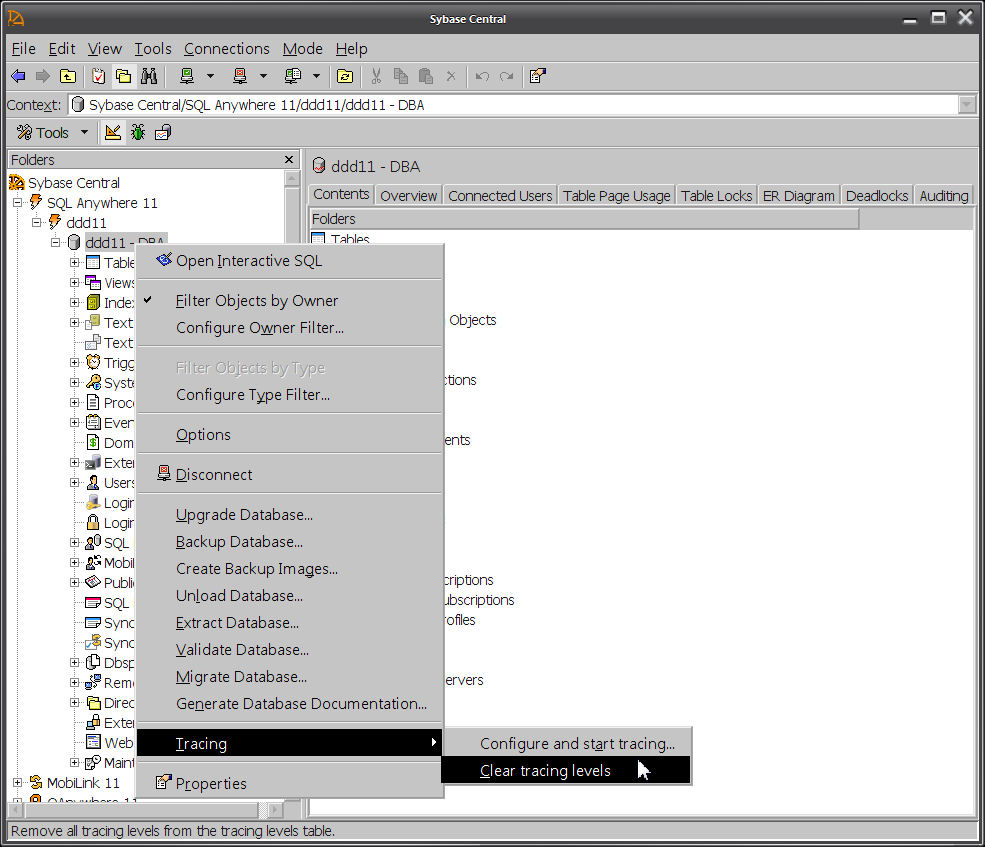

Step 5. Ignore the "Mode" item. Instead, right-click on the grey "oil drum" icon that represents your database in the left pane, and click on "Tracing..."

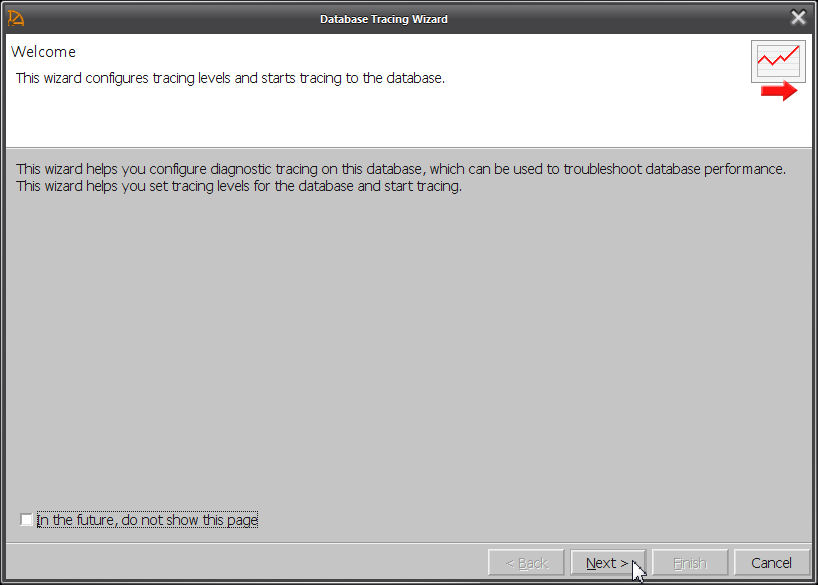

Step 6. See the "Database Tracing Wizard" window appear.

If you don't see the "Database Tracing Wizard" window appear, but you do see another menu level under "Tracing...", click on "Clear tracing levels" to get rid of any old data, then go back to Step 5 to click on "Tracing...".

Design Flaw 2: Menus should have fixed layouts without items and submenus that appear and disappear. If an item isn't available for use it should be greyed out... but not invisible... it's hard to learn how something works ifHere's what you want to see:crapstuff keeps appearing and disappearing.

Step 7. Click on "Next".

Step 8. See the "Tracing Detail Level" window appear.

Step 9. Check "Custom - select all levels manually (recomended for advanced users)".

Step 10. Click on "Next".

Step 11. See the "Edit Tracing Levels" window appear.

Step 12. Click on "New...".

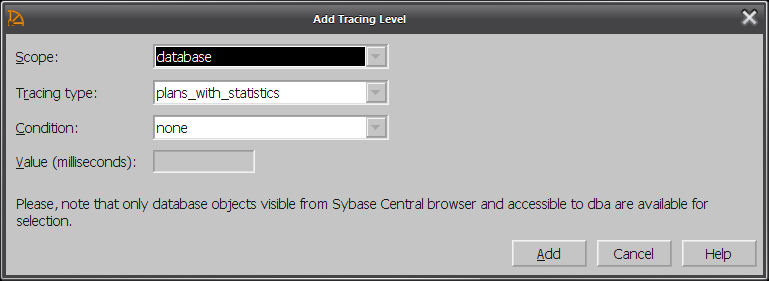

Step 13. See the "Add Tracing Level" dialog box appear.

Step 14. Choose these values (they are the defaults):

Scope: database

Tracing type: plans_with_statistics

Condition: none

Step 15. Click on "Add".

You can add other tracing levels if you want, but "plans_with_statistics" is the important one... if you don't include that one, there's not much point in continuing.

Step 16. Click on "Next".

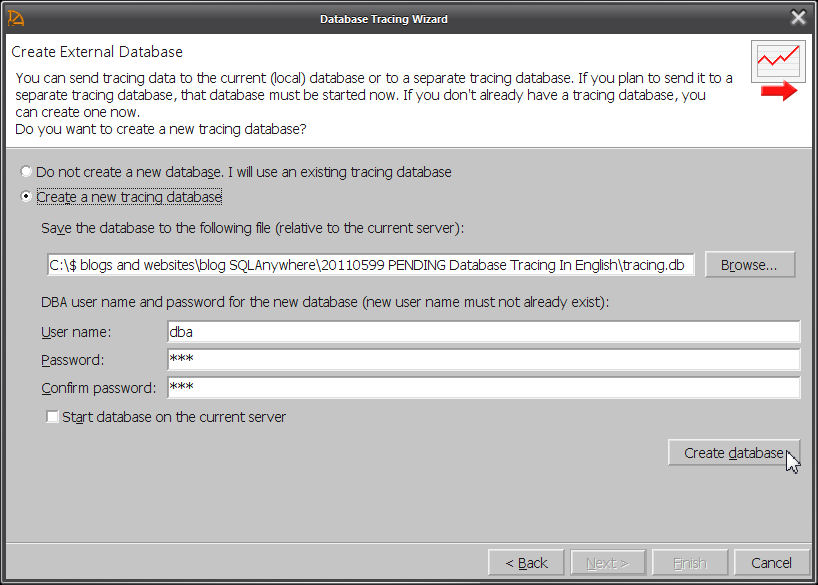

Step 17. See the "Create External Database" window appear.

Step 18. Check "Create a new tracing database".

Step 19. Provide a file specification for the tracing database; in this example it is C:\$ blogs and websites\blog SQLAnywhere\20110518 Database Tracing In xx Easy Steps\tracing.db

Step 20. Fill in these fields:

User name: dba

Password: sql

Confirm password: sql

Design Flaw 3: The on-screen exhortation "(new user name must not already exist)" should be omitted because it's confusing... nothing exists already, it's a new database.

Step 21. Click on "Create database".

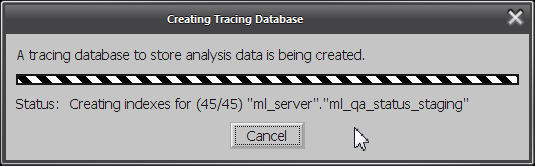

Step 22. See the "Creating Tracing Database" progress window appear and disappear.

Step 23. When control returns to the "Create External Database" window, click on "Next".

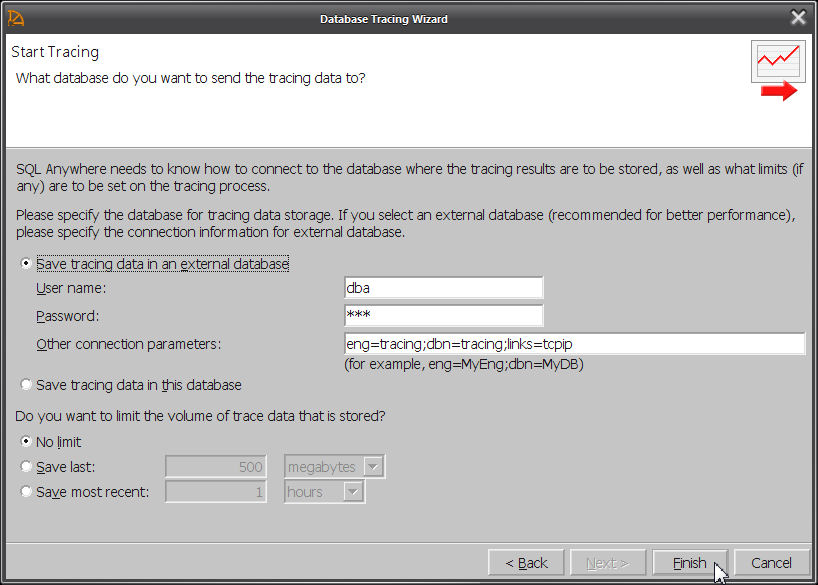

Step 24. See the "Start Tracing" window appear...

...but wait, don't click on anything yet.

Step 25. At this point, you need to start the new tracing database in an engine of its own... I think there is a way to get Sybase Central to do this for you, but I haven't figured how. The "Start Tracing" window doesn't seem to actually start the tracing database, it just starts the tracing activity by connecting to a running tracing database.

Here's a command file to start the new tracing database and an ISQL session which will be needed later:

"%SQLANY11%\bin32\dbspawn.exe"^ -f^ "%SQLANY11%\bin32\dbeng11.exe"^ -o dbeng11_log_tracing.txt^ -oe dbeng11_log_fatal_tracing.txt^ -x tcpip^ tracing.db "%SQLANY11%\bin32\dbisql.com" ^ -c "ENG=tracing;DBN=tracing;UID=dba;PWD=sql"

Step 26. Check and possibly edit the connection information on the "Start Tracing" window.

Step 27. Click on "Finish".

You might get an error at this point "ATTACH TRACING could not connect to the tracing database". If you do, try fiddling around with the connection parameters, maybe adding a LINKS=TCPIP(HOST=localhost;PORT=xxxx). Make sure you did specify -x tcpip when you started the application database in Step 1 and the tracing database in Step 25.

Oh, and if you're application database was started with -sb 0 specified, take that out and start over.

Step 28. See the Sybase Central window get control... it's almost as if nothing is happening, but it is, behind the scenes.

Have a look at the console window for your application database, you'll see these messages:

Diagnostic tracing has been started Diagnostic tracing is being sent to 'UID=dba;PWD=********;DBN=tracing;ENG=tracing;LINKS=tcpip'

Step 29. Switch over to ISQL, or your application, whatever, and run your test(s).

For the purposes of this demonstration, it's important to run the same slow-moving query at least twice. The reason for this is to show how the Database Tracing display summarizes multiple runs of the same query and requires you to drill down to individual executions of that query before displaying the plans.

Here's a query that should take some time even in an empty database; run it at least twice:

SELECT 'query 1',

SYSTABCOL.domain_id,

COUNT(*)

FROM SYSTABCOL

CROSS JOIN SYSTAB

CROSS JOIN SYSGROUP

GROUP BY SYSTABCOL.domain_id

ORDER BY SYSTABCOL.domain_id;

If that query isn't slow enough for you, try this one, but make sure you run the exact same query at least twice:SELECT 'query 2',

SYSTABCOL.domain_id,

COUNT(*)

FROM SYSTABCOL

CROSS JOIN SYSTABCOL AS b

CROSS JOIN SYSGROUP

GROUP BY SYSTABCOL.domain_id

ORDER BY SYSTABCOL.domain_id;

Step 30. When you're done running your test(s), switch back to Sybase Central.

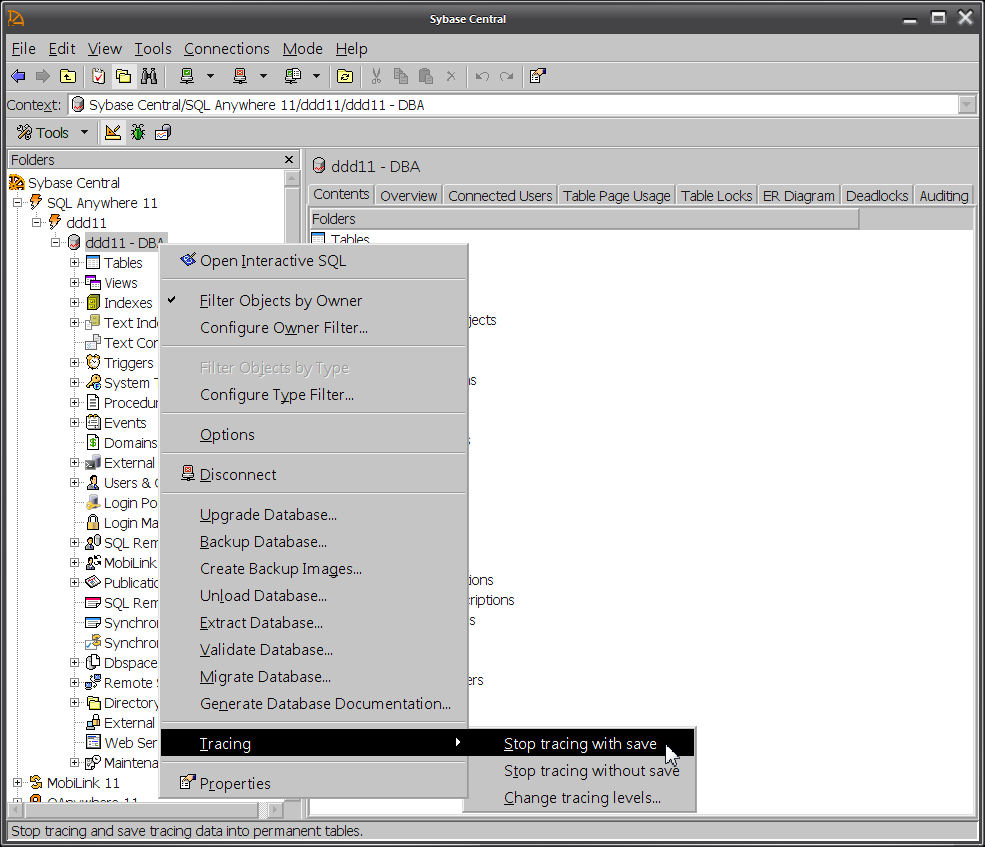

Step 31. Right-click on the database icon in the left pane, and click on "Tracing - Stop tracing with save".

Step 32. See the "Stop Tracing With Save" progress window appear and disappear.

Step 33. When control returns to Sybase Central, if you don't see the "Application Profiling" item on the menu bar, click on "Mode - Application Profiling" to get it to appear.

Design Flaw 4: The same name should never be used for two different menu items in two different locations. A whole generation of developers has been trained to believe polymorphism is a good idea but that doesn't make it true... identically named but different menu items are just confusing.

Design Flaw 5: The "Application Profiling" menu item should always be visible, and it should always be enabled. It's hard enough navigating this GUI without random changes... this is not a video game. In fact, the "Mode" menu item should probably not exist at all... modes suck, always have, always will.

Step 34. Now click on "Application Profiling", the item up on the main menu bar, and then "Open Analysis file or Connect to a Tracing Database":

Step 35. See the "Open Analysis Or Connect To Tracing Database" window appear.

Step 36. Check "In a tracing database" and click on "Open".

Step 37. See the "Connect to a Tracing Database" dialog box appear.

Step 38. Fill in the connection information for your tracing database (not your application database, but your new tracing database):

Identification tab - User ID: dba

Identification tab - Password: sql

Database tab - Server name: tracing

Database tab - Database name: tracing

Step 39. Click on "OK".

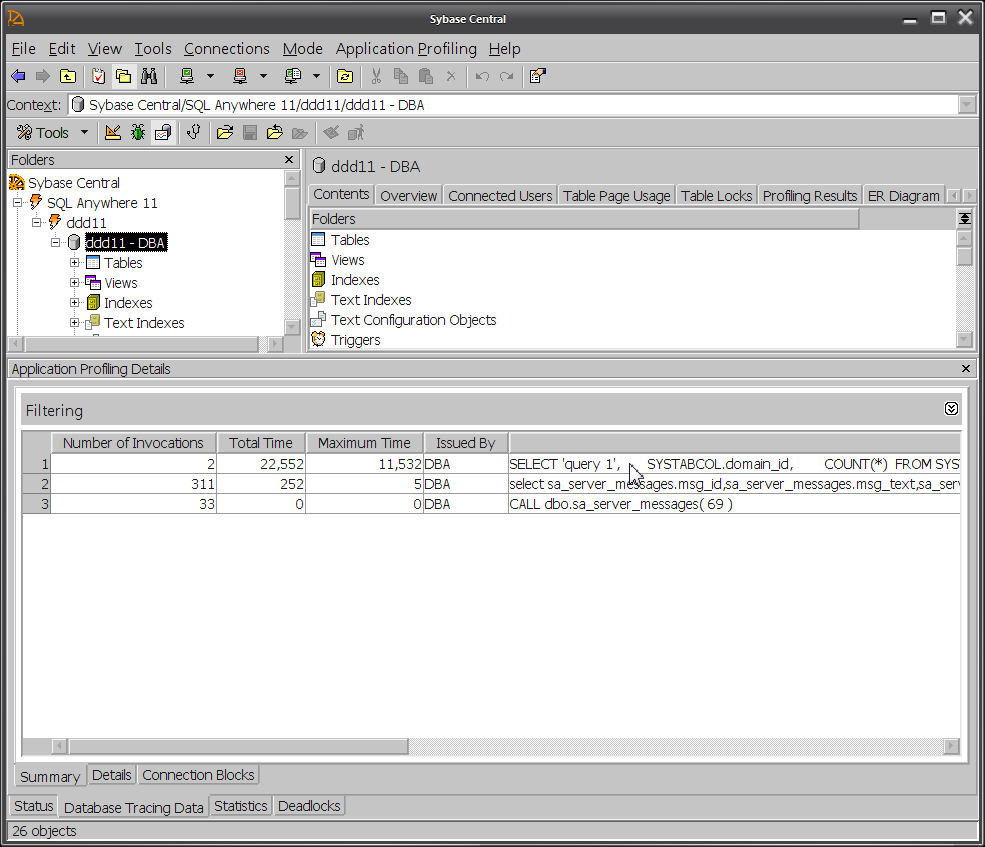

Step 40. The "Application Profiling Details" pane should appear in Sybase Central.

If it doesn't appear, try clicking on "View - 1 Application Profiling Details".

Step 41. Make a note of the "Logging session ID" value, in this case 1; later on this will make it possible to save the plan in a *.saplan file.

Step 42. Click on the "Database Tracing Data" tab at the bottom of the window to see a list of all the queries that have been captured. This is the summary view, where multiple executions of the same query appear on one line.

Step 43. Select the query you are interested in and click on right-mouse "Show the Detailed SQL Statements for the Selected Summary SQL Statement".

Step 44. See the "Details" tab showing the drill-down view of the two separate executions of the same query. This is where you have to get to before seeing the plans.

Step 45. Select one of the executions and click on right mouse - "View More SQL Statement Details for the Selected Statement"

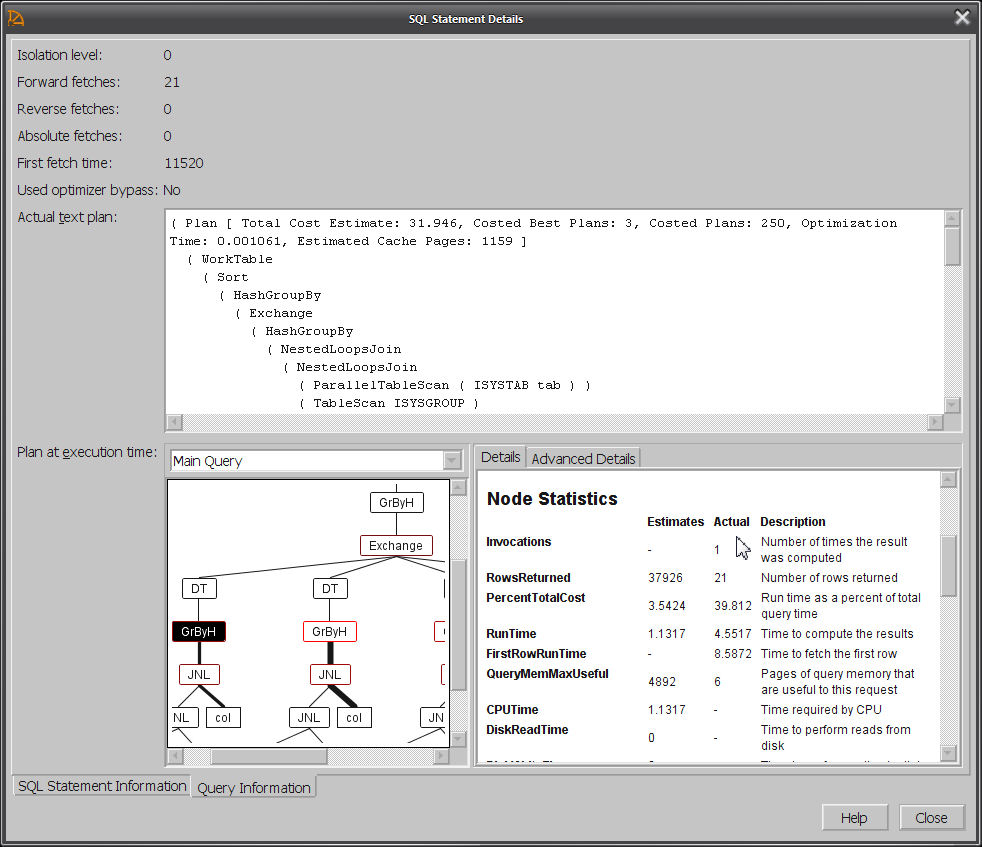

Step 46. See the "SQL Statement Details" window appear.

Step 47. Make a note of the "Request ID" value, in this case 1563; later on this will make it possible to save the plan in a *.saplan file.

Step 48. Click on the "Query Information" tab at the bottom of the "SQL Statement Details" window to see the graphical plan.

Step 49. Make sure the plan contains both "Estimates" and "Actual" values. If the actual values are missing, you probably didn't choose "plans_with_statistics" back in Step 14.

There's no maximize button on the "SQL Statement Details" window, but you can

- manually resize the window by dragging the borders, and

- manually resize the two panes showing the graphical plan by dragging the divider.

Design Flaw 6: There should be a "Save As..." button on the "Query Information" tab so you can send individual plans to other people. There should also be a "Hide SQL" button. In fact, it's not clear why the ISQL Plan Viewer isn't used here instead of a separate GUI implementation... the Plan Viewer displays all the same stuff and it has a "Save As..." button.

Roll Your Own "Save As..."

Step 50. Take the "Logging session ID" 1 from Step 41 and the "Request ID" 1563 from Step 47, plug them into this query, and run it against your tracing database:UNLOAD

SELECT sa_diagnostic_cursor.plan_xml

FROM dbo.sa_diagnostic_cursor

INNER JOIN dbo.sa_diagnostic_request

ON sa_diagnostic_request.logging_session_id = sa_diagnostic_cursor.logging_session_id

AND sa_diagnostic_request.cursor_id = sa_diagnostic_cursor.cursor_id

WHERE sa_diagnostic_request.logging_session_id = 1

AND sa_diagnostic_request.request_id = 1563

TO 'c:\temp\plan_1563.saplan'

DELIMITED BY ''

ESCAPES OFF

HEXADECIMAL OFF

QUOTES OFF;

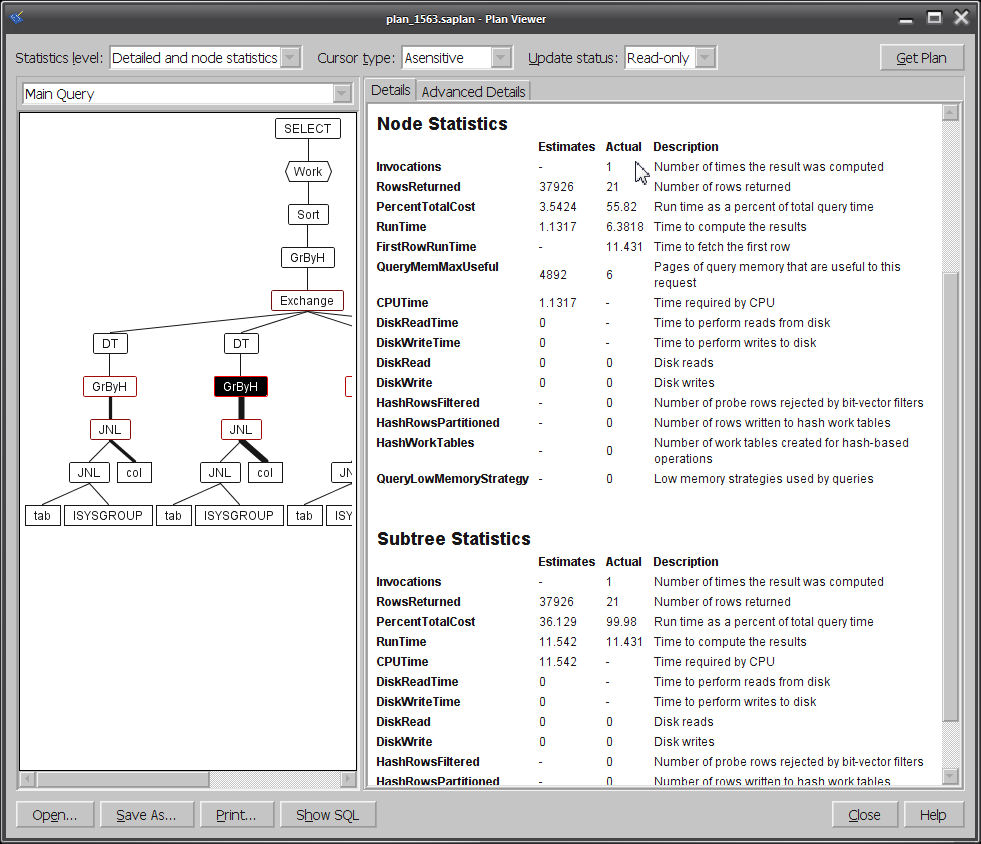

The result will be the graphical *.saplan file you can send to other people, and they can open in ISQL Plan Viewer via ISQL - File - Open:

Make sure non-zero numbers appear in the "Actual" column; if they're all zero, it might be because the UNLOAD statement in Step 50 specified the wrong table for the plan_xml column.

And that's it! ...except for some leftovers:

Design Flaw 7: Better, more informative error messages should be produced, rather than "ATTACH TRACING could not connect to the tracing database".

Design Flaw 8: It shouldn't require 50 steps to navigate through Database Tracing on your way to a graphical plan.

Design Flaw 9: The ISQL File - Open dialog box should include "Graphical Plan (*.saplan)" in the file type drop down, along with the current choices "SQL Statements (*.sql)" and "All Files (*.*)".

Database Tracing Schema

Here's the schema for some of the interesting tables in the tracing database, as displayed by Foxhound when you check "Include system tables":-- dbo.sa_diagnostic_statement (table_id 659) in tracing - May 17 2011 9:38:19AM - Print - Foxhound © 2011 RisingRoad

CREATE TABLE dbo.sa_diagnostic_statement ( -- 37 rows, 12k total = 4k table + 0 ext + 8k index

logging_session_id /* PK */ UNSIGNED INT NOT NULL,

statement_id /* PK */ UNSIGNED BIGINT NOT NULL,

database_object UNSIGNED BIGINT NULL,

line_number UNSIGNED SMALLINT NULL,

signature UNSIGNED INT NULL,

statement_text LONG VARCHAR NOT NULL,

CONSTRAINT ASA33 PRIMARY KEY ( -- 8k

logging_session_id,

statement_id )

);

-- Children

-- dbo.sa_diagnostic_query

-- dbo.sa_diagnostic_request

-- dbo.sa_diagnostic_query (table_id 660) in tracing - May 17 2011 9:38:49AM - Print - Foxhound © 2011 RisingRoad

CREATE TABLE dbo.sa_diagnostic_query ( -- 18 rows, 700k total = 8k table + 676k ext + 16k index, 39,823 bytes per row

logging_session_id /* PK FK */ UNSIGNED INT NOT NULL,

query_id /* PK */ UNSIGNED BIGINT NOT NULL,

statement_id /* FK */ UNSIGNED BIGINT NOT NULL,

user_object_id UNSIGNED BIGINT NOT NULL,

start_time TIMESTAMP NOT NULL,

cache_size_bytes UNSIGNED BIGINT NULL,

optimization_goal TINYINT NULL,

optimization_level TINYINT NULL,

user_estimates TINYINT NULL,

optimization_workload TINYINT NULL,

available_requests TINYINT NULL,

active_requests TINYINT NULL,

max_tasks TINYINT NULL,

used_bypass TINYINT NULL,

estimated_cost_ms UNSIGNED INT NULL,

plan_explain LONG VARCHAR NULL,

plan_xml LONG VARCHAR NULL,

sql_rewritten LONG VARCHAR NULL,

CONSTRAINT ASA34 PRIMARY KEY ( -- 8k

logging_session_id,

query_id )

);

-- Parents of dbo.sa_diagnostic_query

-- dbo.sa_diagnostic_statement

-- Children

-- dbo.sa_diagnostic_cursor

-- dbo.sa_diagnostic_optblock

-- dbo.sa_diagnostic_optrewrite

-- dbo.sa_diagnostic_request

-- dbo.sa_diagnostic_cursor (table_id 661) in tracing - May 17 2011 9:39:23AM - Print - Foxhound © 2011 RisingRoad

CREATE TABLE dbo.sa_diagnostic_cursor ( -- 138 rows, 716k total = 8k table + 692k ext + 16k index, 5,313 bytes per row

logging_session_id /* PK FK */ UNSIGNED INT NOT NULL,

cursor_id /* PK */ UNSIGNED BIGINT NOT NULL,

query_id /* FK */ UNSIGNED BIGINT NOT NULL,

isolation_level TINYINT NULL,

flags UNSIGNED INT NULL,

forward_fetches UNSIGNED INT NULL,

reverse_fetches UNSIGNED INT NULL,

absolute_fetches UNSIGNED INT NULL,

first_fetch_time_ms UNSIGNED INT NULL,

total_fetch_time_ms UNSIGNED INT NULL,

plan_xml LONG VARCHAR NULL,

CONSTRAINT ASA35 PRIMARY KEY ( -- 8k

logging_session_id,

cursor_id )

);

-- Parents of dbo.sa_diagnostic_cursor

-- dbo.sa_diagnostic_query

-- Children

-- dbo.sa_diagnostic_blocking

-- dbo.sa_diagnostic_request

-- dbo.sa_diagnostic_request (table_id 662) in tracing - May 17 2011 9:39:50AM - Print - Foxhound © 2011 RisingRoad

CREATE TABLE dbo.sa_diagnostic_request ( -- 1,161 rows, 120k total = 64k table + 0 ext + 56k index, 106 bytes per row

logging_session_id /* PK FK */ UNSIGNED INT NOT NULL,

request_id /* PK */ UNSIGNED BIGINT NOT NULL,

start_time TIMESTAMP NOT NULL,

finish_time TIMESTAMP NOT NULL,

duration_ms UNSIGNED INT NOT NULL,

connection_number /* FK */ UNSIGNED INT NULL,

request_type UNSIGNED SMALLINT NOT NULL,

statement_id /* FK */ UNSIGNED BIGINT NULL,

query_id /* FK */ UNSIGNED BIGINT NULL,

cursor_id /* FK */ UNSIGNED BIGINT NULL,

sql_code SMALLINT NULL,

CONSTRAINT ASA36 PRIMARY KEY ( -- 16k

logging_session_id,

request_id )

);

-- Parents of dbo.sa_diagnostic_request

-- dbo.sa_diagnostic_connection

-- dbo.sa_diagnostic_cursor

-- dbo.sa_diagnostic_query

-- dbo.sa_diagnostic_statement

-- Children

-- dbo.sa_diagnostic_blocking

-- dbo.sa_diagnostic_hostvariable

Email from a developer

August 6, 2012

Breck;

Thanks for the info -- I believe some of this may be of interest as potential additions to your wonderful instructions.

Setting up a tcpip database connection as a Sybase Central connection profile (in this case to localhost 127.0.0.1 using Windows 7 and SQL Anywhere 12); localhost was chosen because it permits me to trace without an internet connection and I am and will not be network sharing the development version of this database.

I believe you mentioned this should be possible but chose other alternatives.

These connections as I have used them only appear to support one database per server and must use different port numbers and should have unique server names:

I completed the identification tab as follows (only tab used)

for the database to be traced (-x tcpip)

Authentication: Database

UserID: {userid}

Password: {password}

Action: Start and connect to a database on this computer

Database File: {?:\\???\\database.db}

Encryption Key: {dbkey if used}

Database Name: {database.db}

Server Name: {database}

Start Line: "dbeng12 -x tcpip(MyIP=127.0.0.1;ServerPort=2638)"for the tracing database (also -x tcpip / the port choice may not be desirable but it works for me now anyway)

Authentication: Database

UserID: {userid}

Password: {password}

Action: Start and connect to a database on this computer

Database File: {?:\\???\\tracing.db}

Encryption Key: {dbkey if used}

Database Name: {tracing.db}

Server Name: {database_tracing}

Start Line: "dbeng12 -x tcpip(MyIP=127.0.0.1;ServerPort=2639)"Attempts to create an encrypted tracing database had issues as follows:

ISQL gave me an error for an invalid character near "-" (largely useless to me for debugging with all the "-"s in the command)

DBUNLOAD -c ... -an ... -et -ea AES256 -ek {dbkey if used} -n -k -kdSo I ran DBUNLOAD -c ... -n -k -kd from a command prompt and used reload.sql to populate a manually created database (AES256 WITH KEY)

Where "UID={userid);PWD={password};Server={database};DBN={database.db};DBKEY={dbkey if used};HOST=127.0.0.1:2638"

I believe allowed me to connect to an existing server and open database.

When I entered trace on the oil can both the database.db and tracing.db were already open in Sybase Central and I entered the following into the Save tracing data to an external database entries:

UserID: {userid}

Password: {password}

Other connection parameters: Server={database_tracing};DBN={tracing.db};DBKEY={dbkey if used};HOST=127.0.0.1:2639I have successfully used the tracing database following these instructions.

I hope this information is of interest and if worthy incorporated into your valuable instructions.

I also documented this to remember the "Save tracing data to an external database entries" because they are not completely obvious when you get there and they are not "sticky" either when trace is subsequently restarted.

Thanks

Monday, May 16, 2011

UNLOAD SELECT BULK INSERT

Question: How do I implement high-speed automated data transfer from SQL Anywhere to Microsoft SQL Server?

Answer: Nothing beats SQL Anywhere's UNLOAD statement for speed, and on the SQL Server side it's BULK INSERT. Throw in SQL Anywhere's FORWARD TO statement, and some special characters to keep SQL Server from misinterpreting other special characters in the data as field and row delimiters, and you're on your way to a solution.

Here is a sample of the three statements used to transfer all the rows from the DBA.t1 table to test.user1.t1; the first character in the DELIMITED BY, FIELDTERMINATOR and ROWTERMINATOR clauses may not be visible because it's the hexadecimal 1F character:

UNLOAD

SELECT *,

'^&*('

FROM "DBA"."t1"

TO 'D:\\data\\MSSTEST\\t1.TXT'

DELIMITED BY ' '

ESCAPES OFF

QUOTES OFF;

FORWARD TO MSS

'TRUNCATE TABLE test.user1.t1';

FORWARD TO MSS

'BULK INSERT test.user1.t1

FROM ''D:\\data\\MSSTEST\\t1.TXT''

WITH ( BATCHSIZE = 10000,

TABLOCK,

FIELDTERMINATOR = '' '',

ROWTERMINATOR = '' ^&*(\\n'' )';

The syntax of BULK INSERT is rather funky, especially when dealing with string columns containing line breaks and other special characters. I've had good luck with hex 1F as the MSS BULK INSERT field terminator, and hex 1F followed by ^&*( followed by \x0d\x0a as the row terminator.

The hex 1F character was chosen as something you're *really* unlikely to find inside text, and the ^&*( was chosen to for the same reason. Note that SQL Anywhere's DELIMITED BY is a separator, not a terminator, but the extra column in SELECT *, '^&*(' effectively makes it a terminator. Also, UNLOAD SELECT appends \x0d\x0a to each output line... in MSS-speak that is coded as \n.

Here's an end-to-end demonstration, starting with the download location for SQL Server:

Microsoft SQL Server 2008 Express Edition Service Pack 1Here's a command line for starting SQL Server's version of Interactive SQL, the osql.exe utility, using the -S server name sssss and -P system administrator password ppppp you specify when you install SQL Server:

"C:\Program Files\Microsoft SQL Server\100\Tools\Binn\osql.exe" -S sssss -U sa -P ppppp

Here are the SQL Server statements to create a test database and user id:

USE master GO CREATE DATABASE test ON ( NAME = test_dat, FILENAME = 'D:\data\MSSTEST\MSSTEST.mdf', SIZE = 200MB ) LOG ON ( NAME = 'test_log', FILENAME = 'D:\data\MSSTEST\MSSTEST.ldf', SIZE = 100MB ) GO USE master GO sp_addlogin @loginame = 'user1', @passwd = 'sql', @defdb = 'test' GO USE test GO sp_grantdbaccess @loginame = 'user1' GO GRANT CONTROL TO user1; GO

Now you can switch to using user1 with osql.exe:

"C:\Program Files\Microsoft SQL Server\100\Tools\Binn\osql.exe" -S sssss -U user1 -P sql

Here are the SQL Server statements for creating the target tables:

USE test GO CREATE TABLE user1.t1 ( pkey INTEGER NOT NULL PRIMARY KEY, data VARCHAR ( 1000 ) NOT NULL ) GO CREATE TABLE user1.t2 ( pkey INTEGER NOT NULL PRIMARY KEY, data VARCHAR ( 1000 ) NOT NULL ) GO

Here's how to set up the source data on SQL Anywhere, including string values with embedded line breaks:

CREATE TABLE t1 ( pkey INTEGER NOT NULL PRIMARY KEY, data VARCHAR ( 1000 ) NOT NULL ); CREATE TABLE t2 ( pkey INTEGER NOT NULL PRIMARY KEY, data VARCHAR ( 1000 ) NOT NULL ); INSERT t1 VALUES ( 1, 'Hello World' ); INSERT t1 VALUES ( 2, 'More lines of text' ); INSERT t2 VALUES ( 1, 'Hello World' ); INSERT t2 VALUES ( 2, 'More lines of text' ); COMMIT; CHECKPOINT; -- force row counts in SYSTABLE.count to be up-to-date

Here's the code you can run on SQL Anywhere to rapidly push all the data from the DBA.* tables over to SQL Server, replacing what's there:

BEGIN

DECLARE @path_to_data_files LONG VARCHAR;

DECLARE @SQLA_user_id VARCHAR ( 100 );

DECLARE @SQLA_field_delimiter VARCHAR ( 1 );

DECLARE @SQLA_row_delimiter VARCHAR ( 10 );

DECLARE @MSS_field_delimiter VARCHAR ( 1 );

DECLARE @MSS_row_delimiter VARCHAR ( 10 );

DECLARE @MSS_DSN VARCHAR ( 100 );

DECLARE @MSS_login_id VARCHAR ( 100 );

DECLARE @MSS_password VARCHAR ( 100 );

DECLARE @MSS_database VARCHAR ( 100 );

DECLARE @MSS_owner VARCHAR ( 100 );

DECLARE @sql LONG VARCHAR;

DECLARE @message LONG VARCHAR;

DECLARE @sqlcode VARCHAR ( 5 );

DECLARE @errormsg VARCHAR ( 32767 );

DECLARE @all_done VARCHAR ( 1 );

DECLARE @attempt_counter INTEGER;

DECLARE @failure_count INTEGER;

DECLARE LOCAL TEMPORARY TABLE SQLA_table (

table_name VARCHAR ( 128 ) NOT NULL,

row_count UNSIGNED BIGINT NOT NULL,

done VARCHAR ( 1 ),

CONSTRAINT PRIMARY KEY ( table_name ) )

NOT TRANSACTIONAL;

SET @message = STRING ( '********** Starting **********' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

SET TEMPORARY OPTION CLOSE_ON_ENDTRANS = 'OFF';

SET @path_to_data_files = 'D:\\data\\MSSTEST';

SET @SQLA_user_id = 'DBA';

SET @SQLA_field_delimiter = '\x1F'; -- ASCII "UNIT SEPARATOR"; see http://www.cs.tut.fi/~jkorpela/chars/c0.html

--SET @SQLA_field_delimiter = 'ï'; -- Fails with UTF database

--SET @SQLA_field_delimiter = '\xEF'; -- Fails with UTF database

SET @SQLA_row_delimiter = '^&*(';

SET @MSS_field_delimiter = @SQLA_field_delimiter;

SET @MSS_row_delimiter = STRING ( @SQLA_field_delimiter, @SQLA_row_delimiter, '\\n' );

SET @MSS_DSN = 'MSSTEST';

SET @MSS_login_id = 'user1';

SET @MSS_password = 'sql';

SET @MSS_database = 'test';

SET @MSS_owner = 'user1';

BEGIN

DROP EXTERNLOGIN DBA TO MSS;

EXCEPTION WHEN OTHERS THEN

END;

BEGIN

DROP SERVER MSS;

EXCEPTION WHEN OTHERS THEN

END;

SET @sql = STRING (

'CREATE SERVER MSS CLASS ''MSSODBC'' USING ''',

@MSS_DSN,

'''' );

MESSAGE @sql TO CONSOLE;

EXECUTE IMMEDIATE @sql;

SET @sql = STRING ( 'CREATE EXTERNLOGIN DBA TO MSS REMOTE LOGIN ',

@MSS_login_id,

' IDENTIFIED BY ',

@MSS_password );

MESSAGE @sql TO CONSOLE;

EXECUTE IMMEDIATE @sql;

----------------------------------------------------------------------------------

-- UNLOAD all non-empty xxx tables.

SET @message = STRING ( '********** Step 1 - UNLOAD **********' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

FOR f_fetch1

AS c_fetch1 NO SCROLL CURSOR FOR

SELECT

SYSTABLE.table_name AS @table_name,

SYSTABLE.count AS @row_count

FROM SYSTABLE

WHERE USER_NAME ( SYSTABLE.creator ) = @SQLA_user_id

AND SYSTABLE.count > 0

ORDER BY SYSTABLE.table_name

FOR READ ONLY

DO

SET @message = STRING (

'SQL Anywhere UNLOAD SELECT ',

@row_count,

'\x09 rows from ',

@table_name );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

-- Template...

-- UNLOAD SELECT *, '^&*(' FROM "uuu"."ttt" TO 'fff' DELIMITED BY '\x1F' ESCAPES OFF QUOTES OFF

SET @sql = STRING (

'UNLOAD SELECT *, ''',

@SQLA_row_delimiter,

''' FROM "',

@SQLA_user_id,

'"."',

@table_name,

'" TO ''',

@path_to_data_files,

'\\',

@table_name,

'.TXT'' DELIMITED BY ''',

@SQLA_field_delimiter,

''' ESCAPES OFF QUOTES OFF' );

SET @sql = REPLACE ( @sql, '\\', '\\\\' );

MESSAGE @sql TO CLIENT;

MESSAGE @sql TO CONSOLE;

/* Sample command...

UNLOAD SELECT *, '^&*(' FROM "DBA"."t1" TO 'D:\\data\\MSSTEST\\t1.TXT' DELIMITED BY ' ' ESCAPES OFF QUOTES OFF

*/

EXECUTE IMMEDIATE @sql;

/*

-- Artificial delay in case file system needs to catch up.

COMMIT;

WAITFOR DELAY '00:00:01';

*/

COMMIT;

END FOR;

----------------------------------------------------------------------------------

-- TRUNCATE all MSS tables, empty or not.

SET @message = STRING ( '********** Step 2 - TRUNCATE **********' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

FOR f_fetch2

AS c_fetch2 NO SCROLL CURSOR FOR

SELECT

SYSTABLE.table_name AS @table_name,

SYSTABLE.count AS @row_count

FROM SYSTABLE

WHERE USER_NAME ( SYSTABLE.creator ) = @SQLA_user_id

ORDER BY SYSTABLE.table_name

FOR READ ONLY

DO

SET @message = STRING (

'MSS TRUNCATE TABLE ',

@table_name );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

-- Template...

-- FORWARD TO MSS 'TRUNCATE TABLE ddd.xxx.ttt'

SET @sql = STRING (

'FORWARD TO MSS ''TRUNCATE TABLE ',

@MSS_database,

'.',

@MSS_owner,

'.',

@table_name,

'''' );

SET @sql = REPLACE ( @sql, '\\', '\\\\' );

MESSAGE @sql TO CLIENT;

MESSAGE @sql TO CONSOLE;

/* Sample command...

FORWARD TO MSS 'TRUNCATE TABLE test.user1.t2'

*/

BEGIN

EXECUTE IMMEDIATE @sql; -- this will work, or jump to the EXCEPTION

-- Note: The TRUNCATE is not expected to fail.

EXCEPTION

-- Record exception, but otherwise carry on.

WHEN OTHERS THEN

SELECT SQLCODE, ERRORMSG() INTO @sqlcode, @errormsg;

SET @message = STRING (

' EXCEPTION in TRUNCATE: @sqlcode = "',

@sqlcode,

'", "',

' @errormsg = "',

@errormsg,

'"' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

END;

COMMIT;

END FOR;

----------------------------------------------------------------------------------

-- BULK INSERT all MSS xxx tables that are non-empty on MSS.

-- Initialize list of tables to work on.

INSERT SQLA_table

SELECT SYSTABLE.table_name,

SYSTABLE.count,

'N'

FROM SYSTABLE

WHERE USER_NAME ( SYSTABLE.creator ) = @SQLA_user_id

AND SYSTABLE.table_type = 'BASE';

SET @all_done = 'N';

SET @attempt_counter = 0;

WHILE @all_done = 'N' LOOP

SET @attempt_counter = @attempt_counter + 1;

SET @message = STRING ( '********** Step 3 - BULK INSERT Attempt ', @attempt_counter, ' **********' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

SET @all_done = 'Y'; -- assume this attempt will be successful, until proven otherwise

FOR f_fetch3

AS c_fetch3 NO SCROLL CURSOR FOR

SELECT

SQLA_table.table_name AS @table_name,

SQLA_table.row_count AS @row_count

FROM SQLA_table

WHERE SQLA_table.row_count > 0

AND SQLA_table.done = 'N'

ORDER BY SQLA_table.table_name

FOR READ ONLY

DO

SET @message = STRING (

'Attempt #',

@attempt_counter,

' MSS BULK INSERT ',

@row_count,

'\x09 rows into ',

@table_name );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

-- BULK INSERT ddd.xxx.ttt FROM 'fff' WITH ( BATCHSIZE = 10000, TABLOCK, FIELDTERMINATOR = 'ï', ROWTERMINATOR = 'ï^&*(\n' )

SET @sql = STRING (

'FORWARD TO MSS ''BULK INSERT ',

@MSS_database,

'.',

@MSS_owner,

'.',

@table_name,

' FROM ''''',

@path_to_data_files,

'\\',

@table_name,

'.TXT'''' WITH ( BATCHSIZE = 10000, TABLOCK, FIELDTERMINATOR = ''''',

@MSS_field_delimiter,

''''', ROWTERMINATOR = ''''',

@MSS_row_delimiter,

''''' )''' );

SET @sql = REPLACE ( @sql, '\\', '\\\\' );

MESSAGE @sql TO CLIENT;

MESSAGE @sql TO CONSOLE;

/* Sample command...

FORWARD TO MSS 'BULK INSERT ddd.xxx.ttt FROM ''C:\\Clients\\ddd\\05_db\\data\\ttt.TXT'' WITH ( BATCHSIZE = 10000, TABLOCK, FIELDTERMINATOR = '' '', ROWTERMINATOR = '' ^&*(\\n'' )'

*/

BEGIN

EXECUTE IMMEDIATE @sql; -- this will work, or jump to the EXCEPTION

-- It worked, so mark this table.

UPDATE SQLA_table

SET done = 'Y'

WHERE table_name = @table_name;

-- Note: The BULK INSERT may fail with a "file not found".

EXCEPTION

-- It did not work, so carry on.

WHEN OTHERS THEN

SELECT SQLCODE, ERRORMSG() INTO @sqlcode, @errormsg;

SET @all_done = 'N'; -- at least one exception has been detected

SET @message = STRING (

' EXCEPTION in BULK INSERT: @sqlcode = "',

@sqlcode,

'", "',

' @errormsg = "',

@errormsg,

'"' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

END;

COMMIT;

END FOR;

IF @attempt_counter >= 3 THEN

SET @all_done = 'Y' -- no more attempts

END IF;

END LOOP;

----------------------------------------------------------------------------------

SET @message = STRING ( '********** Step 4 - Final Check **********' );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

SELECT COUNT(*)

INTO @failure_count

FROM SQLA_table

WHERE SQLA_table.row_count > 0

AND SQLA_table.done = 'N';

SET @failure_count = COALESCE ( @failure_count, 0 );

IF @failure_count = 0 THEN

SET @message = 'All OK';

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

ELSE

FOR f_fetch4

AS c_fetch4 NO SCROLL CURSOR FOR

SELECT SQLA_table.table_name AS @table_name,

SQLA_table.row_count AS @row_count

FROM SQLA_table

WHERE SQLA_table.row_count > 0

AND SQLA_table.done = 'N'

ORDER BY SQLA_table.table_name

FOR READ ONLY

DO

SET @message = STRING (

'Failed: MSS BULK INSERT ',

@row_count,

'\x09 rows into ',

@table_name );

MESSAGE @message TO CLIENT;

MESSAGE @message TO CONSOLE;

COMMIT;

END FOR;

END IF;

----------------------------------------------------------------------------------

COMMIT;

END;

The CREATE SERVER and CREATE EXTERNLOGIN statements on lines 61 through 73 set up a remote server inside SQL Anywhere for directly communicating with SQL Server via ODBC using the FORWARD TO statements later in the code.

EXECUTE IMMEDIATE statements are used throughout this code because variables are used to parameterize everything; e.g., DSN, user ids, delimiter strings, etc.

The FOR loop on lines 82 through 137 walks through SYSTABLE looking for tables to UNLOAD. The FOR loop on lines 146 through 209 does the same for TRUNCATE TABLE statements sent to SQL Server via FORWARD TO.

The INSERT on lines 216 through 222 loads a local table with information used later for status checking.

The WHILE loop on lines 227 through 326 sends the BULK INSERT statements to SQL Server, with the BEGIN block on lines 285 through 317 used to catch, display, record and otherwise ignore exceptions thrown by SQL Server when BULK INSERT statements fail.

The "Final Check" code on lines 328 through 372 produces the "All OK" statement shown at the end of this sample of messages from a test run:

********** Starting **********

********** Step 1 - UNLOAD **********

SQL Anywhere UNLOAD SELECT 2 rows from t1

UNLOAD SELECT *, '^&*(' FROM "DBA"."t1" TO 'D:\\data\\MSSTEST\\t1.TXT' DELIMITED BY ' ' ESCAPES OFF QUOTES OFF

SQL Anywhere UNLOAD SELECT 2 rows from t2

UNLOAD SELECT *, '^&*(' FROM "DBA"."t2" TO 'D:\\data\\MSSTEST\\t2.TXT' DELIMITED BY ' ' ESCAPES OFF QUOTES OFF

********** Step 2 - TRUNCATE **********

MSS TRUNCATE TABLE t1

FORWARD TO MSS 'TRUNCATE TABLE test.user1.t1'

MSS TRUNCATE TABLE t2

FORWARD TO MSS 'TRUNCATE TABLE test.user1.t2'

********** Step 3 - BULK INSERT Attempt 1 **********

Attempt #1 MSS BULK INSERT 2 rows into t1

FORWARD TO MSS 'BULK INSERT test.user1.t1 FROM ''D:\\data\\MSSTEST\\t1.TXT'' WITH ( BATCHSIZE = 10000, TABLOCK, FIELDTERMINATOR = '' '', ROWTERMINATOR = '' ^&*(\\n'' )'

Attempt #1 MSS BULK INSERT 2 rows into t2

FORWARD TO MSS 'BULK INSERT test.user1.t2 FROM ''D:\\data\\MSSTEST\\t2.TXT'' WITH ( BATCHSIZE = 10000, TABLOCK, FIELDTERMINATOR = '' '', ROWTERMINATOR = '' ^&*(\\n'' )'

********** Step 4 - Final Check **********

All OK

1 row(s) affected

Execution time: 6.935 seconds

Here are two queries on SQL Server that show the line breaks have been preserved:

SELECT pkey, LEFT ( data, 20 ) AS data FROM test.user1.t1 ORDER BY pkey GO SELECT pkey, LEFT ( data, 20 ) AS data FROM test.user1.t2 ORDER BY pkey GO 1> SELECT pkey, LEFT ( data, 20 ) AS data FROM test.user1.t1 ORDER BY pkey 2> GO pkey data ----------- -------------------- 1 Hello World 2 More lines of text (2 rows affected) 1> SELECT pkey, LEFT ( data, 20 ) AS data FROM test.user1.t2 ORDER BY pkey 2> GO pkey data ----------- -------------------- 1 Hello World 2 More lines of text (2 rows affected)

Friday, May 13, 2011

ClickAppStore sure sounds like a winner!

We all believe all press releases all the time, right?

OK, so that's a "no"... but here's a press release that

- is not clearly content-free,

- is not obviously full of bull

siht(at least not to me), and - does mention technology of interest to me (SQL Anywhere, MobiLink, UltraLite)...

Here are a few excerpts that got my attention:

...bringing the app store concept to the broader world of business applications for enterprise mobility.

...ClickAppStore includes hundreds of ready-to-use mobile business apps, and a unique integrated development environment (IDE) in which IT professionals can assemble business apps into end-user solutions using primarily no-coding 'drag and drop' wizards.

...deployment using a wide variety of devices including iPhone, iPad, BlackBerry Torch, Android smart-phones, Windows Mobile Devices, Panasonic ToughBooks, Windows based tablets, Ultra-mobile PCs and more.

Each Starter Kit is pre-integrated with all of the required "plumbing" for connectivity, back-office integration, server-side and its databases, as well as basic elements of the user interface (UI). Sybase's Mobile Platform (SMP) is one option for pre-integration including SQL Ultralite for device database, Mobilink for synchronization, SUP for back office integration, and Afaria for device management and file transfer. For server-side database the options include SQLServer, Oracle, and SQL Anywhere. Online and offline modes of working are fully supported, allowing users to work in areas with no reception, with automatic synchronization as connectivity is re-established. ClickAppStore also offer apps to facilitate the usage of the mobile device features inside business applications; such as the built-in camera, barcode and credit card reader.

Wednesday, May 11, 2011

Synchronizing Updated Primary and Foreign Key Values with MobiLink

Question: Can MobiLink handle cascading updates to primary and foreign key values on the consolidated database?

Answer: Yes, if you add UNIQUE constraints using the technique described in Synchronizing Updated Primary Key Values With MobiLink.

Let's start with three simple tables on the consolidated: parent, child and grandchild, with a hierarchial foreign key structure:

CREATE TABLE parent (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1 ) );

CREATE TABLE child (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1, p2 ),

FOREIGN KEY ( p1 )

REFERENCES parent ( p1 ) ON UPDATE CASCADE );

CREATE TABLE grandchild (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

p3 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1, p2, p3 ),

FOREIGN KEY ( p1, p2 )

REFERENCES child ( p1, p2 ) ON UPDATE CASCADE );

Step 1: Add new candidate key columns to each table: r1, r2 and r3, and the associated UNIQUE constraints.

CREATE TABLE parent (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL DEFAULT AUTOINCREMENT,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1 ),

UNIQUE ( r1 ) );

CREATE TABLE child (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL,

r2 BIGINT NOT NULL DEFAULT AUTOINCREMENT,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1, p2 ),

UNIQUE ( r1, r2 ),

FOREIGN KEY ( p1 )

REFERENCES parent ( p1 ) ON UPDATE CASCADE );

CREATE TABLE grandchild (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

p3 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL,

r2 BIGINT NOT NULL,

r3 BIGINT NOT NULL DEFAULT AUTOINCREMENT,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1, p2, p3 ),

UNIQUE ( r1, r2, r3 ),

FOREIGN KEY ( p1, p2 )

REFERENCES child ( p1, p2 ) ON UPDATE CASCADE );

Here are the original tables on the remote, before any changes; just the primary key and data columns, and as usual the last_updated column is omitted:

CREATE TABLE parent (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

PRIMARY KEY ( p1 ) );

CREATE TABLE child (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

PRIMARY KEY ( p1, p2 ),

FOREIGN KEY ( p1 )

REFERENCES parent ( p1 ) ON UPDATE CASCADE );

CREATE TABLE grandchild (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

p3 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

PRIMARY KEY ( p1, p2, p3 ),

FOREIGN KEY ( p1, p2 )

REFERENCES child ( p1, p2 ) ON UPDATE CASCADE );

Step 2: Add the new columns r1, r2 and r3 to the tables on the remote database. Give r1, r2 or r3 a GLOBAL AUTOINCREMENT default, use them as the PRIMARY KEY, and change the original PRIMARY KEY into a UNIQUE constraint.

Leave the FOREIGN KEY constraints alone, however: the target of a FOREIGN KEY constraint in a child table does not have to be the PRIMARY KEY of the parent table, it can be a UNIQUE constraint. By leaving the FOREIGN KEY constraints alone, the remote database continues to have exactly the same referential integrity rules as the consolidated database.

In other words, we're just switching the PRIMARY KEY and UNIQUE constraints on the remote database to accomodate MobiLink's insistence that primary key values must not be changed on the remote.

CREATE TABLE parent (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL

DEFAULT GLOBAL AUTOINCREMENT ( 1000000000 ),

UNIQUE ( p1 ),

PRIMARY KEY ( r1 ) );

CREATE TABLE child (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL,

r2 BIGINT NOT NULL

DEFAULT GLOBAL AUTOINCREMENT ( 1000000000 ),

UNIQUE ( p1, p2 ),

PRIMARY KEY ( r1, r2 ),

FOREIGN KEY ( p1 )

REFERENCES parent ( p1 ) ON UPDATE CASCADE );

CREATE TABLE grandchild (

p1 VARCHAR ( 10 ) NOT NULL,

p2 VARCHAR ( 10 ) NOT NULL,

p3 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL,

r2 BIGINT NOT NULL,

r3 BIGINT NOT NULL

DEFAULT GLOBAL AUTOINCREMENT ( 1000000000 ),

UNIQUE ( p1, p2, p3 ),

PRIMARY KEY ( r1, r2, r3 ),

FOREIGN KEY ( p1, p2 )

REFERENCES child ( p1, p2 ) ON UPDATE CASCADE );

At this point, we are free to download cascading primary key changes (p1, p2, p3) from the consolidated database to the remote database, but not changes to r1, r2 or r3. Here are some assumptions:

- values inserted into parent.r1, child.r2 and grandchild.r3 are globally unique across all databases, both consolidated and remote,

- once a row is inserted in parent, child or grandchild, the values in r1, r2 and r3 are never changed by any process anywhere,

- except for inserts and MobiLink scripts the columns r1, r2 and r3 are ignored by all processes running on the consolidated database, and

- changes to p1, p2 and p3 are initiated by application code on the consolidated database only, and are downloaded to the remote.

Tip: If you forget to switch the PRIMARY KEY and UNIQUE clauses on the remote database tables, the MobiLink client dbmlsync.exe will produce an error message like this:

E. 2011-05-05 08:16:40. SQL statement failed: (-196) Index 'parent UNIQUE (r1)' for table 'parent' would not be unique

Step 3: Code the MobiLink scripts to deal with the schema differences:

---------------------------------------------------------------------

-- Delete and add MobiLink scripts for table parent.

CALL ml_add_table_script ( 'v1', 'parent', 'upload_insert', NULL );

CALL ml_add_table_script ( 'v1', 'parent', 'upload_insert', '

INSERT parent

( p1,

data,

r1 )

VALUES ( {ml r.p1},

{ml r.data},

{ml r.r1} )' );

CALL ml_add_table_script ( 'v1', 'parent', 'upload_update', NULL );

CALL ml_add_table_script ( 'v1', 'parent', 'upload_update', '

UPDATE parent

SET data = {ml r.data}

WHERE r1 = {ml r.r1}' );

CALL ml_add_table_script ( 'v1', 'parent', 'upload_delete', NULL );

CALL ml_add_table_script ( 'v1', 'parent', 'upload_delete', '

DELETE parent

WHERE r1 = {ml r.r1}' );

CALL ml_add_table_script ( 'v1', 'parent', 'download_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'parent', 'download_cursor', '

SELECT p1,

data,

r1

FROM parent

WHERE last_updated >= {ml s.last_table_download}' );

CALL ml_add_table_script ( 'v1', 'parent', 'download_delete_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'parent', 'download_delete_cursor', '--{ml_ignore}' );

---------------------------------------------------------------------

-- Delete and add MobiLink scripts for table child.

CALL ml_add_table_script ( 'v1', 'child', 'upload_insert', NULL );

CALL ml_add_table_script ( 'v1', 'child', 'upload_insert', '

INSERT child

( p1,

p2,

data,

r1,

r2 )

VALUES ( {ml r.p1},

{ml r.p2},

{ml r.data},

{ml r.r1},

{ml r.r2} )' );

CALL ml_add_table_script ( 'v1', 'child', 'upload_update', NULL );

CALL ml_add_table_script ( 'v1', 'child', 'upload_update', '

UPDATE child

SET data = {ml r.data}

WHERE r1 = {ml r.r1}

AND r2 = {ml r.r2}' );

CALL ml_add_table_script ( 'v1', 'child', 'upload_delete', NULL );

CALL ml_add_table_script ( 'v1', 'child', 'upload_delete', '

DELETE child

WHERE r1 = {ml r.r1}

AND r2 = {ml r.r2}' );

CALL ml_add_table_script ( 'v1', 'child', 'download_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'child', 'download_cursor', '

SELECT p1,

p2,

data,

r1,

r2

FROM child

WHERE last_updated >= {ml s.last_table_download}' );

CALL ml_add_table_script ( 'v1', 'child', 'download_delete_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'child', 'download_delete_cursor', '--{ml_ignore}' );

---------------------------------------------------------------------

-- Delete and add MobiLink scripts for table grandchild.

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_insert', NULL );

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_insert', '

INSERT grandchild

( p1,

p2,

p3,

data,

r1,

r2,

r3 )

VALUES ( {ml r.p1},

{ml r.p2},

{ml r.p3},

{ml r.data},

{ml r.r1},

{ml r.r2},

{ml r.r3} )' );

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_update', NULL );

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_update', '

UPDATE grandchild

SET data = {ml r.data}

WHERE r1 = {ml r.r1}

AND r2 = {ml r.r2}

AND r3 = {ml r.r3}' );

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_delete', NULL );

CALL ml_add_table_script ( 'v1', 'grandchild', 'upload_delete', '

DELETE grandchild

WHERE r1 = {ml r.r1}

AND r2 = {ml r.r2}

AND r3 = {ml r.r3}' );

CALL ml_add_table_script ( 'v1', 'grandchild', 'download_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'grandchild', 'download_cursor', '

SELECT p1,

p2,

p3,

data,

r1,

r2,

r3

FROM grandchild

WHERE last_updated >= {ml s.last_table_download}' );

CALL ml_add_table_script ( 'v1', 'grandchild', 'download_delete_cursor', NULL );

CALL ml_add_table_script ( 'v1', 'grandchild', 'download_delete_cursor', '--{ml_ignore}' );

The upload_insert and download_cursor scripts look no different than they would if p1, p2 and p3 were still the primary key columns on the remote database: all columns uploaded, all columns downloaded (except for last_updated, which is omitted from the remote database).

The upload_update scripts ignore the p1, p2 and p3 columns because they are never changed on the remote. The WHERE clauses use r1, r2 and r3 to locate the rows to be updated, and that's OK because

- r1, r2 and r3 are UNIQUE on the consolidated and

- r1, r2 and r3 are never modified on the consolidated.

Here's a demonstration; first, three rows are inserted on the consolidated database, and dbmlsync is run to download those rows to the remote:

INSERT parent ( p1, data ) VALUES ( 'a', 0 );

INSERT child ( p1, p2, data, r1 ) VALUES ( 'a', 'b', 0, 1 );

INSERT grandchild ( p1, p2, p3, data, r1, r2 ) VALUES ( 'a', 'b', 'c', 0, 1, 1 );

COMMIT;

Here's the step-by-step with expected output:

---------------------------------------------------------------------------

-- 1. Run this on the consolidated and remote database to display the data.

SELECT * FROM parent;

SELECT * FROM child;

SELECT * FROM grandchild;

/* Expected output from the consolidated database...

p1,data,r1,last_updated

'a',0,1,'2011-05-05 08:26:42.946'

p1,p2,data,r1,r2,last_updated

'a','b',0,1,1,'2011-05-05 08:26:42.967'

p1,p2,p3,data,r1,r2,r3,last_updated

'a','b','c',0,1,1,1,'2011-05-05 08:26:43.109'

*/

/* Expected output from the remote database...

p1,data,r1

'a',0,1

p1,p2,data,r1,r2

'a','b',0,1,1

p1,p2,p3,data,r1,r2,r3

'a','b','c',0,1,1,1

*/

---------------------------------------------------------------------------

-- 2. Change the primary keys on the consolidated database.

UPDATE parent

SET p1 = 'aa'

WHERE p1 = 'a';

UPDATE child

SET p2 = 'bb'

WHERE p1 = 'aa'

AND p2 = 'b';

UPDATE grandchild

SET p3 = 'cc'

WHERE p1 = 'aa'

AND p2 = 'bb'

AND p3 = 'c';

COMMIT;

---------------------------------------------------------------------------

-- 3. Run a sync to download the new primary keys.

---------------------------------------------------------------------------

-- 4. Display the new "primary keys" on the remote database.

SELECT * FROM parent;

SELECT * FROM child;

SELECT * FROM grandchild;

/* Expected output from remote...

p1,data,r1

'aa',0,1

p1,p2,data,r1,r2

'aa','bb',0,1,1

p1,p2,p3,data,r1,r2,r3

'aa','bb','cc',0,1,1,1

*/

---------------------------------------------------------------------------

-- 5. Use the new "primary keys" to modify the data on the remote database.

UPDATE parent

SET data = 2

WHERE p1 = 'aa';

UPDATE child

SET data = 2

WHERE p1 = 'aa'

AND p2 = 'bb';

UPDATE grandchild

SET data = 2

WHERE p1 = 'aa'

AND p2 = 'bb'

AND p3 = 'cc';

COMMIT;

---------------------------------------------------------------------------

-- 6. Run a sync to upload the modified data.

---------------------------------------------------------------------------

-- 7. Display the modified data on the consolidated database.

SELECT * FROM parent;

SELECT * FROM child;

SELECT * FROM grandchild;

/* Expected output from consolidated..

p1,data,r1,last_updated

'aa',2,1,'2011-05-05 08:32:09.292'

p1,p2,data,r1,r2,last_updated

'aa','bb',2,1,1,'2011-05-05 08:32:09.294'

p1,p2,p3,data,r1,r2,r3,last_updated

'aa','bb','cc',2,1,1,1,'2011-05-05 08:32:09.296'

*/

Monday, May 9, 2011

Friday, May 6, 2011

Synchronizing Updated Primary Key Values With MobiLink

Question: Can MobiLink handle changes to primary key values on the consolidated database?

Answer: I've been telling people "No!" for so many years that I've come to believe it myself:

"No! You can't change primary key values if you're synchronizing with MobiLink!"

Epiphany

A couple of days ago someone asked the question again, someone who really wanted the answer to be "Yes!", so much so that I started thinking about "Why not?" and "How?"... and that's when I had this epiphany:

The rule is actually this: You can't change primary key values on the remote database if you're synchronizing with MobiLink.MobiLink is extremely flexible, and it has been used for many years to handle schema differences between consolidated and remote databases. So what if the table on the remote database had a different column as PRIMARY KEY, and the original PRIMARY KEY column from the consolidated was defined as a UNIQUE constraint on the remote?

It Works!

Yes, you can change primary key values on the consolidated database when you're using MobiLink. Here's how it works:

Let's start with a simple table on the consolidated, one primary key column, one data column, and the usual last_updated:

CREATE TABLE t (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1 ) );

Step 1: Add a new column r1 to the table on the consolidated database, and make it UNIQUE. This column will not be used by any application code on the consolidated database, it's just there for MobiLink purposes.

CREATE TABLE t (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL DEFAULT AUTOINCREMENT,

last_updated TIMESTAMP NOT NULL DEFAULT TIMESTAMP,

PRIMARY KEY ( p1 ),

UNIQUE ( r1 ) );

Here's the corresponding table on the remote, before any changes; just the primary key and data columns, and as usual the last_updated column is omitted:

CREATE TABLE t (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

PRIMARY KEY ( p1 ) );

Step 2: Add the new column r1 to the table on the remote database. Give r1 a GLOBAL AUTOINCREMENT default, make r1 the PRIMARY KEY, and change the original primary key column p1 into a UNIQUE constraint.

CREATE TABLE t (

p1 VARCHAR ( 10 ) NOT NULL,

data INTEGER NOT NULL DEFAULT 0,

r1 BIGINT NOT NULL

DEFAULT GLOBAL AUTOINCREMENT ( 1000000000 ),

PRIMARY KEY ( r1 ),

UNIQUE ( p1 ) );

At this point, we are free to download primary key changes (p1) from the consolidated database to the remote database, but not changes to r1. Here are some assumptions:

- values inserted into t.r1 are globally unique across all databases, both consolidated and remote,

- once a row is inserted in t the value in t.r1 is never changed by any process anywhere,

- except for inserts and MobiLink scripts the column t.r1 is ignored by all processes running on the consolidated database, and

- changes to t.p1 are initiated by application code on the consolidated database only, and are downloaded to the remote.

Step 3: Code the MobiLink scripts to deal with the schema differences:

CALL ml_add_table_script ( 'v1', 't', 'upload_insert', '

INSERT t

( p1,

data,

r1 )

VALUES ( {ml r.p1},

{ml r.data},

{ml r.r1} )' );

CALL ml_add_table_script ( 'v1', 't', 'upload_update', '

UPDATE t

SET data = {ml r.data}

WHERE r1 = {ml r.r1}' );

CALL ml_add_table_script ( 'v1', 't', 'upload_delete', '

DELETE t

WHERE r1 = {ml r.r1}' );

CALL ml_add_table_script ( 'v1', 't', 'download_cursor', '

SELECT p1,

data,

r1

FROM t

WHERE last_updated >= {ml s.last_table_download}' );

The upload_insert and download_cursor scripts look no different than they would if p1 was still the primary key on the remote database.

The upload_update script ignores the t.p1 column because it is never changed on the remote. The WHERE clause uses t.r1 to locate the row to be updated, and that's OK because

- t.r1 is UNIQUE on the consolidated and

- t.r1 is never modified on the consolidated.

Here's a demonstration; first, one row is inserted on the consolidated database, and dbmlsync is run to download that row to the remote:

INSERT t VALUES ( 'a', 0, DEFAULT, DEFAULT );

COMMIT;

Here's the step-by-step with expected output:

---------------------------------------------------------------------------

-- 1. Run this on the consolidated and remote database to display the data.

SELECT * FROM t;

/* Expected output from consolidated and remote...

p1,data,r1,last_updated

'a',0,1,'2011-05-04 11:39:55.523'

p1,data,r1

'a',0,1

*/

---------------------------------------------------------------------------

-- 2. Change the primary key on the consolidated database.

UPDATE t SET p1 = 'xxx' WHERE p1 = 'a';

COMMIT;

---------------------------------------------------------------------------

-- 3. Run a sync to download the new primary key.

---------------------------------------------------------------------------

-- 4. Display the new primary key on the remote database.

SELECT * FROM t;

/* Expected output from remote...

p1,data,r1

'xxx',0,1

*/

---------------------------------------------------------------------------

-- 5. Use the new primary key to modify the data on the remote database.

UPDATE t SET data = 1 WHERE p1 = 'xxx';

COMMIT;

---------------------------------------------------------------------------

-- 6. Run a sync to upload the modified data.

---------------------------------------------------------------------------

-- 7. Display the modified data on the consolidated database.

SELECT * FROM t;

/* Expected output from consolidated..

p1,data,r1

'xxx',1,1

*/

Wednesday, May 4, 2011

Monday, May 2, 2011

Misunderstanding SQL Anywhere

Here are five of my favorite misunderstandings about SQL Anywhere...

1. Index scans are always faster than table scans.

Not true... index scans are often faster, but not "always", not even "usually". Here's what the Help says about table scans:

"Sequential table scans are used when it is likely that most of the table pages have a row that match the query's search condition or a suitable index is not defined. Although sequential table scans may read more pages than index scans, the disk I/O can be substantially less expensive because the pages are read in contiguous blocks from the disk (this performance improvement is best if the database file is not fragmented on the disk). Sequential I/O reduces disk head movement and rotational latency. For large tables, sequential table scans also read groups of several pages at a time. This further reduces the cost of sequential table scans relative to index scans."Table access is not limited to "index scans versus table scans"; there are actually 8 different table access methods.

2. Performance is affected by your SQL coding style.

In a complex query there is an almost infinite variety of ways you can code the SQL to obtain exactly the same result set and exactly the same execution plan. Unlike most programming languages SQL syntax is very loosely coupled to the underlying semantics, making SQL clauses more like spreadsheet cells where the order and arrangement of the cells make little difference to the underlying calculations.

For an introduction to how SQL Anywhere transforms your query, see Query processing phases, in particular the 12 different semantic query transformations described here.

3. SQL Anywhere writes your data to the database when it does a commit.

A commit guarantees your changes are permanent, but it doesn't guarantee they've been written to the physical database file. In fact, no high-performance database management system makes that guarantee because for a busy database with many connections executing many commits it would crush performance by beating the disk drives to death.

Here are some snippets from Chapter 9: Protecting in SQL Anywhere Studio 9 Developer's Guide:

During execution, database data may be stored in seven different locations:The cache contains table and index pages that have been read from the database files into memory. The cache is always up to date; to be specific, logical database changes made by SQL statements are immediately reflected in the table and index pages in the cache, including both committed and uncommitted changes.

- Cache in memory

- Database files on disk, including other dbspace file

- Transaction log file on disk

- Optional mirror log file on disk

- Checkpoint log in the database file

- Rollback log in the cache and database file

- Temporary file on disk

...there is no direct correspondence between COMMIT operations and data in the physical database files; uncommitted changes may be written to the database files while committed changes may not be written immediately.

The database files are up to date after a checkpoint; to be specific, all table and index pages in the physical database files are guaranteed to be up to date with respect to data in the cache whenever a checkpoint is complete.

The transaction log file, also known as the redo log, contains a sequential record of logical changes made to the database since this log file was created. Both committed and uncommitted changes may be written to the physical transaction log file, and they may be written before a COMMIT is executed on the corresponding connection. COMMIT and CHECKPOINT operations are recorded in the transaction log as well as other changes.

The transaction log file is up to date after a COMMIT; to be specific, the changes made by a particular transaction are guaranteed to be written to the physical transaction log by the time control is returned to the connection after a COMMIT ending that transaction is successfully completed. In fact, all changes, committed or otherwise, are guaranteed to be written to the transaction log whenever a COMMIT is performed on any connection.

4. The transaction log is used for rollbacks.

Nope... the rollback log is used for rollbacks, the transaction log is used for forward recovery.

Here's another excerpt from SQL Anywhere Studio 9 Developer's Guide:

A rollback log, also known as an undo log or row level undo log, is maintained for each connection to hold a sequential record of the reverse operations that would undo the logical changes made to the database by this connection since the last COMMIT or ROLLBACK was executed on the connection.

The rollback log pages are stored in the SYSTEM dbspace database file, together with table and data pages, and they are written to the physical database file before and during a checkpoint. They are not necessarily written to the physical database file when the logical database changes are first made by SQL statements. Note that rollback log pages are included in the checkpoint log.

The rollback log is up to date after a checkpoint; to be specific, all current rollback log pages in the physical database file are guaranteed to be up to date whenever a checkpoint is completed.

The data in a rollback log may include changes made before and after a checkpoint; there is no correspondence between a checkpoint and the end of any particular transaction.

The data in a rollback log is used during normal operation to roll back the changes when a ROLLBACK is executed on the connection, and it is then discarded. It is also discarded when a COMMIT is executed on the connection.

5. SQL Anywhere saves the execution plan when it compiles your SQL query, and uses the same plan every time it executes the query.

Some other database products save execution plans in the database, but not SQL Anywhere, not ever. What SQL Anywhere does do, sometimes, is temporarily save or "cache" the plan at runtime.

Here's what the Help says about the subject:

Normally, the optimizer selects an execution plan for a query every time the query is executed. Optimizing at execution time allows the optimizer to choose a plan based on current system state, and the values of current selectivity estimates and estimates based on the values of host variables. For queries that are executed frequently, the cost of query optimization can outweigh the benefits of optimizing at execution time. To reduce the cost of optimizing these statements repeatedly, the SQL Anywhere server considers caching plans... [more]