This is Part 1 of an article written in March 2010 with the title "Downloading Data into a SQL Anywhere In-Memory Database from Microsoft SQL Server".

This is the first in what may become a series of articles presenting various techniques for downloading data into a Sybase SQL Anywhere Version 11 in-memory database from a variety of other databases, and then saving or uploading data to those other databases before the in-memory process is shut down.

This article specifically discusses downloading data from a Microsoft SQL Server 2008 database into a SQL Anywhere database that is using the "never write" mode as opposed to the "checkpoint only" mode of in-memory operation. Five techniques are presented, with actual code tested on a non-trivial set of data. The data was chosen to expose some of the quirks that may be encountered when dealing with the interface between two different database products.

This is primarily a how-to article. No conclusions are drawn about "what's best for you" because that depends on your priorities. In fact, one single application may use more than one technique for different purposes; e.g., fast in one place, flexible in another.

For an overview, read the next section Introducing The Techniques Used, and then skip ahead to the sections Performance and Breaking News!

- Part 1

- Part 2

- Technique 2: Download Via MobiLink

- Part 3

- Technique 3: Download Via Proxy Table

- Part 4

- Technique 4: Download Via Linked Server

- Part 5

- Technique 5: Download Via OPENROWSET

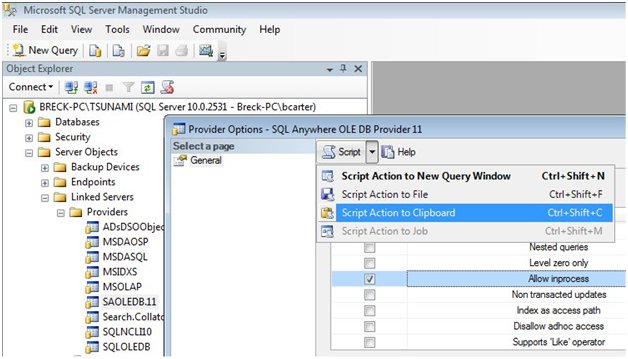

- Revisiting Techniques 4 and 5: The SAOLEDB.11 Provider

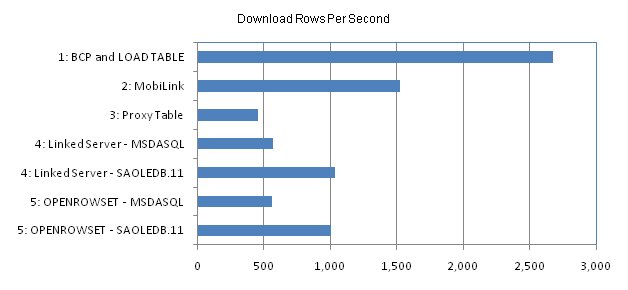

- Performance

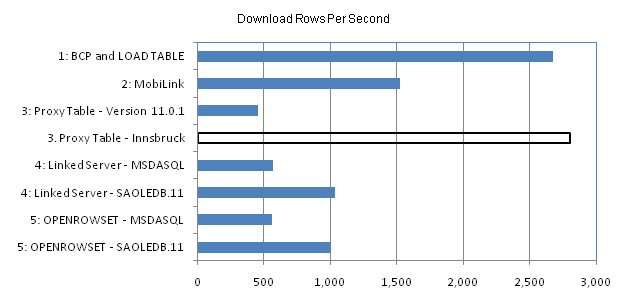

- Breaking News!

- But Wait, There's More

Introducing The Techniques Used

- Technique 1 is a two-step process: First, the high-performance SQL Server BCP utility (Bulk Copy Program) is used to unload the SQL Server source table across the LAN to an ASCII text file.

Then, SQL Anywhere's high-performance LOAD TABLE statement is used to load the data from that file into the target table in the in-memory database.

The BCP "queryout" option is used to run the simple query "SELECT * FROM main.dbo.mss_source". Special character strings are used as row and column delimiters in the text file to deal with the fact that the data itself contains special characters like tabs, commas, quotes and line breaks.

"c:\Program Files\Microsoft SQL Server\100\Tools\Binn\bcp.exe"^

"SELECT * FROM main.dbo.mss_source"^

queryout "\\PAVILION2\C\data\main\mss_source.txt"^

-c^

-t $#$\t^

-r $#$\n^

-P j68Fje9#fyu489^

-S BRECK-PC\TSUNAMI^

-U sa

1925469 rows copied.

LOAD TABLE sa_target

FROM 'c:/data/main/mss_source.txt'

DELIMITED BY '$#$\x09'

ESCAPES OFF

QUOTES OFF

ROW DELIMITED BY '$#$\x0D\x0A';

- Technique 2 uses MobiLink to perform a download-only synchronization from SQL Server to SQL Anywhere.

The MobiLink synchronization process is launched by running the MobiLink client dbmlsync.exe on the client computer, which then connects via TCP/IP to the MobiLink server running on the server computer. The MobiLink server then connects via ODBC to the SQL Server database and runs a pre-defined SQL script called a "download_cursor" (see below) to select all the rows from the mss_source table. Those rows are then sent down to the MobiLink client which inserts them into the SQL Anywhere database.

USE main

GO

EXECUTE ml_add_table_script

'v1',

'sa_target',

'download_cursor',

'SELECT * FROM mss_source'

GO

- Technique 3 uses a SQL Anywhere proxy table, also known as "Remote Data Access", to copy all the rows from the mss_source table on SQL Server to the sa_target table on SQL Anywhere.

This is a "pull" process whereby the proxy table is defined on SQL Anywhere, pointing to the table on SQL Server, and the INSERT ... SELECT statement is run on SQL Anywhere:

CREATE EXISTING TABLE proxy_mss_source

AT 'mss.main.dbo.mss_source';

INSERT sa_target

SELECT *

FROM proxy_mss_source;

- Technique 4 uses the SQL Server's Linked Server feature to "push" all the rows from SQL Server to SQL Anywhere. This is similar to Technique 3 except there's no "proxy table" defined on SQL Server; instead, the INSERT ... SELECT running on SQL Server explicitly names the SQL Anywhere table:

EXEC sp_addlinkedserver

@server = 'mem',

@srvproduct = 'xxx',

@provider = 'MSDASQL',

@datasrc = 'sa_system_dsn'

GO

INSERT INTO mem..dba.sa_target

SELECT *

FROM mss_source

GO

- Technique 5 uses SQL Server's OPENROWSET syntax to perform the same operation as Technique 4 but without having to define the linked server. The code looks vastly different but under the covers it's the same technology:

INSERT INTO OPENROWSET ( 'MSDASQL',

'sa_system_dsn'; 'dba'; 'sql',

dba.sa_target )

SELECT *

FROM mss_source

GO

- Techniques 4 and 5 are shown twice, the second time using a different OLE DB provider: the SAOLEDB.11 provider that ships with SQL Anywhere is a faster alternative to the MSDASQL provider that ships with SQL Server.

Setting Up For The Tests

This section describes the test setup in some detail. You can skip to Technique 1 if you want, and return to this section when you have questions about the setup.

This is not a benchmark performance test setup, not by any stretch of the definition of "benchmark", nor is it a comparison of SQL Anywhere with SQL Server. Every technique presented involves both products, and the performance figures are presented to help you decide among the various techniques, not decide between two products. Even then, performance is often not the only determinant; you may find one technique better than another based on other criteria, such as flexibility or simplicity, rather than performance.

Here is the hardware and software used to create the test environment.

Server computer

- Whitebox desktop with Intel Core 2 Quad Q9450 2.66Ghz 4G RAM

- Windows Vista Ultimate 64 bit build 6001 SP1

- SQL Server 2008 Enterprise Edition 64 Service Pack 1

- Host name "BRECK-PC"

- SQL Server name "TSUNAMI"

- Database name "main"

- SAOLEDB.11 provider from SQL Anywhere 11.0.1.2276

Client computer

- HP Pavilion laptop, 4GHz Pentium 4, 2G RAM

- Windows XP SP2

- SQL Anywhere 11.0.1.2276

- Hostname "PAVILION2"

- SQL Anywhere server name "mem"

- Database name "mem"

Standard setups were used to install Windows, SQL Server 2008 and SQL Anywhere 11, including all the services required to launch SQL Server. These standard setups are not described here, but everything else is, including the commands to launch the SQL Anywhere server.

For the most part text scripts are presented for both SQL statements and Windows commands, as opposed to GUI dialogs that perform equivalent tasks. Text scripts are used for two reasons: First, scripts are easier to explain because they're more concise, and script syntax tends to change less frequently than GUI layouts. Second, scripts are favored by many DBAs for implementing processes that must be repeated. GUIs are great for learning new subjects and performing one-time tasks, but scripts are often much better at automating repetitive tasks. Scripts also allow comments and they lend themselves to change control.

For the record, the equivalent GUI dialogs are available in these installed programs:

- Start - All Programs - Microsoft SQL Server 2008 - SQL Server Management Studio

- Start - All Programs - Microsoft SQL Server 2008 - Configuration Tools - SQL Server Configuration Manager

- Start - All Programs - SQL Anywhere 11 - Sybase Central

Figure 1 shows how the SQL Server command-line SQL utility was launched to execute many of the SQL scripts in this article.

Figure 1: Launch the SQL Server SQL Command UtilityContext: SQL Server

"c:\Program Files\Microsoft SQL Server\100\Tools\Binn\sqlcmd.exe"^

-d main^

-I^

-P j68Fje9#fyu489^

-S BRECK-PC\TSUNAMI^

-U sa

Line 1 in Figure 1 shows the filespec for sqlcmd.exe. According to Microsoft this is a modern replacement for the venerable osql.exe, but it works pretty much the same way. The caret "^" is the Windows command line continuation character, and it's used to make the scripts in this article easier to read and to describe.

Line 2 specifies the database to be used when first connecting to SQL Server. Unlike SQL Anywhere, with SQL Server you connect to a server and then specify which database you want to use, and you can switch databases while remaining connected. With SQL Anywhere, connections are made to a database within a server, and if you want to use a different database you must start a new connection even if it's on the same server.

Line 3 lets you use "doublequotes" around identifiers in your SQL commands, which you must do if any of them are reserved words.

Lines 4, 5 and 6 specify the password, server and userid for sqlcmd's connection to SQL Server. BRECK-PC is the host name for the server computer and TSUNAMI is the SQL Server database server name.

Figure 2 shows the SQL Server CREATE DATABASE statement used to create the source database. The data and log files are stored together on the E: drive, separate from the C: drive containing the operating system and SQL Server binaries.

Figure 2: Creating the SQL Server Source DatabaseContext: SQL Server

CREATE DATABASE main

ON PRIMARY

( NAME = main_dat,

FILENAME = 'E:\data\main\main.mdf',

SIZE = 2GB,

FILEGROWTH = 200MB )

LOG ON

( NAME = 'main_log',

FILENAME = 'E:\data\main\main.ldf',

SIZE = 2GB,

FILEGROWTH = 200MB )

GO

Line 1 in Figure 2 specifies the database name "main". Lines 2 through 6 specify where the data file resides whereas lines 7 through 11 specify where the log file goes. As far as the rest of this article is concerned only the database name "main" is important, the rest is not.

Extra disk I/O on the server computer was avoided by using Control Panel - System - System Protection - Automatic restore points to turn off Windows Vista restore points for the E: drive. Also, the SQL Server VSS Writer was disabled using Control Panel - Administrative Tools - Services for the same reason.

Some SQL Server configuration changes were necessary for some techniques; the code for these changes is shown later in this article.

Figure 3 shows the CREATE TABLE for the source table on SQL Server. This table contained 63 columns, 1,925,469 rows and approximately 1 gigabyte of statistical data gathered by an automatic database monitor process. The actual nature of the data is not as important as the fact that it comes from a real-world application, not an artificial test data generator. No claims are made, however, for the suitability of this data for benchmark purposes.

Tip: When transferring data back and forth between SQL Server and SQL Anywhere, avoid blobs. That means on the SQL Server side avoid data types like TEXT and VARCHAR ( MAX ) and on the SQL Anywhere side avoid LONG VARCHAR. In fact, in SQL Anywhere you should avoid any string defined as VARCHAR ( 8001 ) or larger; those aren't blobs in SQL Anywhere but SQL Server will treat them as such because 8000 is the limit there. The reason for this suggestion? To avoid performance problems on the SQL Server side as well as a fatal memory leak. The original table used for this article contained nine columns defined as TEXT but since the actual data didn't contain any string values longer than 144 characters it was easy to change them all to VARCHAR ( 1000 ). The fact that that helped indicates the problem lies with the data type, not the data.

Figure 3: Creating the Source TableContext: SQL Server

CREATE TABLE mss_source (

sampling_id BIGINT NOT NULL,

sample_set_number BIGINT NOT NULL,

connection_number BIGINT NOT NULL,

blocker_owner_table_name VARCHAR ( 257 ) NULL,

blocker_lock_type VARCHAR ( 32 ) NULL,

blocker_owner_name VARCHAR ( 128 ) NULL,

blocker_table_name VARCHAR ( 128 ) NULL,

blocker_reason VARCHAR ( 1000 ) NULL,

blocker_row_identifier VARCHAR ( 32 ) NULL,

current_engine_version VARCHAR ( 1000 ) NOT NULL,

page_size INTEGER NOT NULL,

ApproximateCPUTime DECIMAL ( 30, 6 ) NULL,

BlockedOn BIGINT NULL,

BytesReceived BIGINT NULL,

BytesSent BIGINT NULL,

CacheHits BIGINT NULL,

CacheRead BIGINT NULL,

"Commit" BIGINT NULL,

DiskRead BIGINT NULL,

DiskWrite BIGINT NULL,

FullCompare BIGINT NULL,

IndAdd BIGINT NULL,

IndLookup BIGINT NULL,

Isolation_level BIGINT NULL,

LastReqTime VARCHAR ( 1000 ) NOT NULL

DEFAULT '1900-01-01',

LastStatement VARCHAR ( 1000 ) NULL,

LockCount BIGINT NULL,

LockName BIGINT NULL,

LockTableOID BIGINT NULL,

LoginTime VARCHAR ( 1000 ) NOT NULL

DEFAULT '1900-01-01',

LogWrite BIGINT NULL,

Name VARCHAR ( 128 ) NULL,

NodeAddress VARCHAR ( 1000 ) NULL,

Prepares BIGINT NULL,

PrepStmt BIGINT NULL,

QueryLowMemoryStrategy BIGINT NULL,

QueryOptimized BIGINT NULL,

QueryReused BIGINT NULL,

ReqCountActive BIGINT NULL,

ReqCountBlockContention BIGINT NULL,

ReqCountBlockIO BIGINT NULL,

ReqCountBlockLock BIGINT NULL,

ReqCountUnscheduled BIGINT NULL,

ReqStatus VARCHAR ( 1000 ) NULL,

ReqTimeActive DECIMAL ( 30, 6 ) NULL,

ReqTimeBlockContention DECIMAL ( 30, 6 ) NULL,

ReqTimeBlockIO DECIMAL ( 30, 6 ) NULL,

ReqTimeBlockLock DECIMAL ( 30, 6 ) NULL,

ReqTimeUnscheduled DECIMAL ( 30, 6 ) NULL,

ReqType VARCHAR ( 1000 ) NULL,

RequestsReceived BIGINT NULL,

Rlbk BIGINT NULL,

RollbackLogPages BIGINT NULL,

TempFilePages BIGINT NULL,

TransactionStartTime VARCHAR ( 1000 ) NOT NULL

DEFAULT '1900-01-01',

UncommitOp BIGINT NULL,

Userid VARCHAR ( 128 ) NULL,

previous_ApproximateCPUTime DECIMAL ( 30, 6 ) NOT NULL DEFAULT 0.0,

interval_ApproximateCPUTime AS ( COALESCE ( "ApproximateCPUTime", 0 )

- previous_ApproximateCPUTime ),

previous_Commit BIGINT NOT NULL DEFAULT 0,

interval_Commit AS ( COALESCE ( "Commit", 0 )

- previous_Commit ),

previous_Rlbk BIGINT NOT NULL DEFAULT 0,

interval_Rlbk AS ( COALESCE ( Rlbk, 0 )

- previous_Rlbk ),

PRIMARY KEY ( sample_set_number, connection_number ) );

GO

Figure 4 shows three Windows XP commands used to create the SQL Anywhere database file, start the database and launch the Interactive SQL utility.

Figure 4: Creating the SQL Anywhere Target DatabaseContext: SQL Anywhere

"%SQLANY11%\bin32\dbinit.exe"^

mem.db

"%SQLANY11%\bin32\dbspawn.exe" -f^

"%SQLANY11%\bin32\dbsrv11.exe"^

-im nw^

-c 1200M^

-o dbsrv11_log.txt^

mem.db

"%SQLANY11%\bin32\dbisql.com"^

-c "ENG=mem;DBN=mem;UID=dba;PWD=sql;CON=mem-1"

Line 1 in Figure 4 is the full filespec for the database initialization utility dbinit.exe as installed by a standard SQL Anywhere setup on Windows. Multiple versions of SQL Anywhere often exist on workstation computers so when using command files it is always a good idea to specify full filespecs instead of relying on the system PATH.

Tip: The environment variable SQLANY11 makes it a easier to code filespecs in Windows command files that run SQL Anywhere executables. This environment variable is created by the standard SQL Anywhere 11 setup as follows:

SQLANY11=C:\Program Files\SQL Anywhere 11

Line 2 specifies the file name for the SQL Anywhere database file. The transaction log file name defaults to mem.log, and the page size defaults to 4K which is the best choice for most applications.

Line 4 is optional. It launches the "spawn" program dbspawn.exe, which in turn launches the database server itself (lines 5 through 9) in the background. This is handy when writing command files because without the services of dbspawn.exe, the server program dbsrv11.exe would run in the foreground and not return control to command file until the server was shut down. In other words, without dbspawn, the third command (dbisql.com) wouldn't be executed until after the server was shut down.

The -f parameter on line 4 tells dbspawn to ignore the possibility that other SQL Anywhere servers might be running, and to start this one regardless.

Line 5 is the full filespec for the network version of the SQL Anywhere database server, dbsrv11.exe. Alternatively, you can use "personal server" dbeng11.exe which supports exactly the same features except for these differences:

- dbeng11.exe supports a maximum of ten concurrent connections,

- it uses at most one CPU for request processing, and

- it doesn't support network client/server connections.

Line 6 specifies the "-im nw" option: in-memory never write. This is the most efficient and most extreme version of in-memory operation: Not only is there no transaction log and no temporary file like the other version (-im c or in-memory checkpoint only) but there is no checkpoint log and changes are never written to the database file.

The implications of in-memory operation are quite profound: All the data must fit into the cache, and so must all the temporary data; there is no temporary file to absorb the excess. Plus, it's up to you to save the data if you need it later. You can write SQL code to do this, or use the dbunload.exe utility, but neither the BACKUP statement nor the dbbackup.exe utility will work... well, they'll work, but they won't help: they won't back up the data in memory, just the empty database file.

Line 7 is optional, but recommended for the tests described here. The -c 1200M option specifies an initial database cache size 1200 megabytes. Testing showed that this is a reasonable choice for the data set used in this article, and the whole topic of "picking the initial cache size" is worth some discussion:

SQL Anywhere is renowned for being a self-managing database. Most performance and tuning options have well-chosen defaults, and in most cases SQL Anywhere runs very well out of the box with no configuration changes. The database cache size is no exception: The initial cache size is automatically calculated using a formula that performs well in most circumstances, and the cache is dynamically resized up and down as needs change during execution.

However, launching an empty database using the in-memory mode, and then loading vast quantities of data into the database cache, qualifies as an exception to the above phrase "in most cases". In this case the formula for the initial cache size picks 2 megabytes because the empty database file is very small, and the dynamic resizing process must grow the cache by a factor of 600. This adversely affects performance in two ways: a less-than-optimal internal cache structure is determined by the tiny initial cache size, and the act of growing the cache step-by-step as data is loaded can slow down the loading process itself.

In this particular case, an in-memory database that starts out empty, performance can be improved by taking a guess at the initial cache size, say -c 500M, or even better -c 1G, or as determined by testing, -c 1200M.

Line 8 in Figure 4 is optional but recommended for every production database. The -o parameter specifies the filespec for a text file to receive a copy of all diagnostic messages written by the database server. This output is often called the "console log", not to be confused with the transaction log. Unlike SQL Server, SQL Anywhere does not save these messages anywhere unless you specify -o filespec.

Line 9 specifies the filespec for the database file, in this case mem.db. Unless overridden by other parameters the file name portion determines the runtime "engine name" and "database name" to be used in connection strings: ENG=mem;DBN=mem;

Lines 11 and 12 launch the Interactive SQL utility used to run many of the SQL statements in this article. The -c connection string specifies runtime server or engine name ENG=mem, the runtime database name DBN=mem, the SQL Anywhere user id UID=dba, the password PWD=sql, and an optional connection name CON=mem-1.

Tip: Because SQL Anywhere databases are often isolated and embedded behind other layers of software, the same user id is often used for all database connections and it can be hard to tell one connection from another when debugging problems. Different CON= connection names can be used to alleviate the situation.

Figure 5 shows the SQL Anywhere version of the SQL Server table defined earlier in Figure 3. SQL Anywhere offers a high degree of Transact SQL compatibility so the only syntactic differences are the three computed columns defined on lines 63, 67 and 71 in Figure 5.

Figure 5: Creating the SQL Anywhere Target TableContext: SQL Anywhere

1 CREATE TABLE sa_target (

2 sampling_id BIGINT NOT NULL,

3 ... lines 3 to 60 omitted, identical to Figure 3 ...

61 Userid VARCHAR ( 128 ) NULL,

62 previous_ApproximateCPUTime DECIMAL ( 30, 6 ) NOT NULL DEFAULT 0.0,

63 interval_ApproximateCPUTime DECIMAL ( 30, 6 ) NOT NULL COMPUTE (

64 COALESCE ( "ApproximateCPUTime", 0 )

65 - previous_ApproximateCPUTime ),

66 previous_Commit BIGINT NOT NULL DEFAULT 0,

67 interval_Commit BIGINT NOT NULL COMPUTE (

68 COALESCE ( "Commit", 0 )

69 - previous_Commit ),

70 previous_Rlbk BIGINT NOT NULL DEFAULT 0,

71 interval_Rlbk BIGINT NOT NULL COMPUTE (

72 COALESCE ( Rlbk, 0 )

73 - previous_Rlbk ),

74 PRIMARY KEY ( sample_set_number, connection_number ) );

Technique 1: Download Via BCP and LOAD TABLE

This two-step technique uses BCP (Bulk Copy Program) to unload the SQL Server source table to a text file, and SQL Anywhere's LOAD TABLE statement to load the data from that file into the target table in the in-memory database.

The BCP utility is a popular high-performance workhorse often used to load and unload vast quantities of data to and from SQL Server databases.

On the SQL Anywhere side, similar functionality is provided by the LOAD TABLE and UNLOAD SQL statements.

Figure 6 shows the code for the first step, the Windows command line that executes BCP.

Figure 6: BCP Unload SELECT to Text FileContext: SQL Server

"c:\Program Files\Microsoft SQL Server\100\Tools\Binn\bcp.exe"^

"SELECT * FROM main.dbo.mss_source"^

queryout "\\PAVILION2\C\data\main\mss_source.txt"^

-c^

-t $#$\t^

-r $#$\n^

-P j68Fje9#fyu489^

-S BRECK-PC\TSUNAMI^

-U sa

Line 1 in Figure 6 is the full filespec for bcp.exe as installed by a standard SQL Server 2008 setup on Windows.

Line 2 selects all the rows and columns the table to be unloaded: mss_source, owned by "dbo", located in the database "main". BCP is a bit restrictive when it comes to coding the SELECT: it must be enclosed in "double quotes" and appear all on one line; it can't be written on multiple lines even if you use continuation characters.

Line 3 specifies "queryout" for output (as opposed to "in" for input), and specifies the full output filespec. This filespec is relative to the computer running SQL Server itself, not the computer running the BCP utility. In this case, BCP is being run on the target laptop computer, not the server computer, and the text file is being written to the laptop... but it doesn't matter where BCP itself is running, it matters where the target file resides relative to the server. That's why the filespec is written using the "\\server\share\..." Universal Naming Convention format, so that SQL Server will write the data across the network to the PAVILION2 computer.

Line 4 specifies that the -c for "character" format is to be used for the data written to the text file. A -n for "native" format is also available but it's meant for target tables stored on other SQL Server databases; it cannot be used with SQL Anywhere.

Line 5 specifies the -t string to placed between each column value in the output text file. The documentation calls it a "field terminator" but it's really a separator because it doesn't appear after the last column value on each line. The default separator string is the single tab character (hexadecimal 09, or \t in SQL-Server-speak), but since the data contains tab characters something different must be chosen as a terminator. The -t string $#$\t means dollar sign, number sign, dollar sign and tab, and it corresponds to the SQL Anywhere DELIMITED BY '$#$\x09' clause in the LOAD TABLE statement shown later.

Line 6 specifies the -r "row terminator" string to be placed at the end of each row of data in the output text file. The default is the "newline" character, which corresponds to the carriage return - line feed pair (CR-LF or hexadecimal 0D0A) in Windows. Once again, the data contains all sorts of special characters including CR-LFs, so a different -r string is specified: $#$\n means dollar sign, number sign, dollar sign and CR-LF. This corresponds to the SQL Anywhere clause ROW DELIMITED BY '$#$\x0D\x0A'.

Lines 7, 8 and 9 specify the password, server and userid for BCP's connection to SQL Server.

Figure 7 shows how lines 2 and 3 in Figure 6 may be changed to specify a table name rather than a SELECT when you want all the rows and columns. With this simpler but less flexible table name syntax, you also have to change the "queryout" on line 3 to "out".

Figure 7: Alternative BCP Unload Table to Text FileContext: SQL Server

"c:\Program Files\Microsoft SQL Server\100\Tools\Binn\bcp.exe"^

main.dbo.mss_source^

out "\\PAVILION2\C\data\main\mss_source.txt"^

-c^

-t $#$\t^

-r $#$\n^

-P j68Fje9#fyu489^

-S BRECK-PC\TSUNAMI^

-U sa

Figure 8 shows that the BCP step in the earlier Figure 6 unloaded all 1.9 million rows in about 7.4 minutes. The output text file was about 985M in size.

Figure 8: BCP Unload Display OutputContext: SQL Server

Starting copy...

1000 rows successfully bulk-copied to host-file. Total received: 1000

1000 rows successfully bulk-copied to host-file. Total received: 2000

1000 rows successfully bulk-copied to host-file. Total received: 3000

1000 rows successfully bulk-copied to host-file. Total received: 4000

...

1000 rows successfully bulk-copied to host-file. Total received: 1920000

1000 rows successfully bulk-copied to host-file. Total received: 1921000

1000 rows successfully bulk-copied to host-file. Total received: 1922000

1000 rows successfully bulk-copied to host-file. Total received: 1923000

1000 rows successfully bulk-copied to host-file. Total received: 1924000

1000 rows successfully bulk-copied to host-file. Total received: 1925000

1925469 rows copied.

Network packet size (bytes): 4096

Clock Time (ms.) Total : 446469 Average : (4312.66 rows per sec.)

Figure 9 shows the SQL Anywhere command that loads the file created by BCP in the earlier Figure 6. This step ran in about 4.6 minutes to load all 1.9 million rows into memory. As discussed in the previous section, if the initial cache size parameter -c 1200M is omitted from the SQL Anywhere server command line in Figure 4 the LOAD TABLE in Figure 9 slows down dramatically, to over 11 minutes from less than five.

Figure 9: SQL Anywhere LOAD TABLEContext: SQL Anywhere

LOAD TABLE sa_target

FROM 'c:/data/main/mss_source.txt'

DELIMITED BY '$#$\x09'

ESCAPES OFF

QUOTES OFF

ROW DELIMITED BY '$#$\x0D\x0A';

Line 1 in Figure 9 specifies the target table name to be loaded, and line 2 specifies the filespec of the input file.

Tip: The forward slashes "/" in a string literal are interpreted as backslashes "\" when SQL Anywhere processes a filespec on Windows. This usage avoids any confusion with SQL Anywhere's interpretation of the backslash as an escape character in string literals; i.e., \n is usually interpreted as a new line character whereas /n is not. Note that this interpretation of "/" as "\" is a special trick that only applies to filespecs; in other contexts, a forward slash is treated as a forward slash.

Line 3 in Figure 9 is SQL Anywhere's syntax for a column separator string that agrees with the SQL Server syntax shown on line 5 in Figure 6: a dollar sign, number sign, dollar sign and a tab character represented by the hexadecimal escape string \x09.

Line 4 tells SQL Anywhere that SQL Server doesn't transform special characters into escape strings using the backslash character; e.g., if the input string contains a tab character, it will contain the actual single tab character rather than the hexadecimal escape string \x09.

Line 5 tells SQL Anywhere that SQL Server doesn't put 'single quotes' or "double quotes" around string values.

Line 6 is SQL Anywhere's syntax for a row terminator string that agrees with the SQL Server syntax shown on line 6 in Figure 6: a dollar sign, number sign, dollar sign followed by a carriage return and line feed represented by the hexadecimal escape strings \x0D and \x0A.

-- [to be continued] --